Introduction

ChatGPT and other AI chatbots have been getting a lot of attention lately. They are extremely helpful for getting information and responding to custom queries, and they can even be used to write code and handle other common daily tasks. They represent an exciting new digital frontier, but from a security perspective they also represent yet another place for your corporate data to go. Netskope’s inline Next-gen Secure Web Gateway capabilities can block posting of sensitive data to collaboration tools such as Slack and Teams out of the box. That got me thinking: since ChatGPT is essentially a chat system, why can’t we use a similar capability to block posting sensitive information to ChatGPT? As a quick tangent, I also want to give OpenAI some credit, as they warn the user that it’s not appropriate to put personal data into their platform:

That being said, this warning comes only after I have already posted the data, and it relies on ChatGPT recognizing the data as sensitive, which may not be the case with other sensitive data types. As one final note, this article was written based on an “All Web” steering configuration. If you use Netskope for inline CASB (SaaS), then you may need to add additional domains to the custom app we will create later on. Let’s start at the top and walk through the different approaches Netskope can take to securing ChatGPT.

The Legacy Proxy Approach: Block the URL

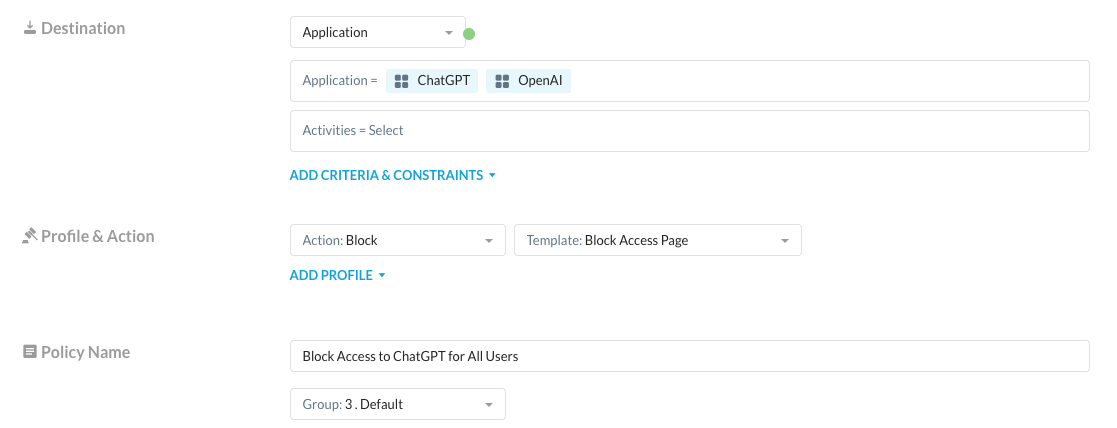

This is the most heavy-handed approach, but unfortunately it’s the only way that most Next Generation Firewalls and legacy proxies can handle this case. It’s a simple allow or block mechanism. Netskope can, of course, block the category and URL for ChatGPT with a simple policy. For this case, it’s as simple as creating a policy to block ChatGPT and OpenAI entirely:

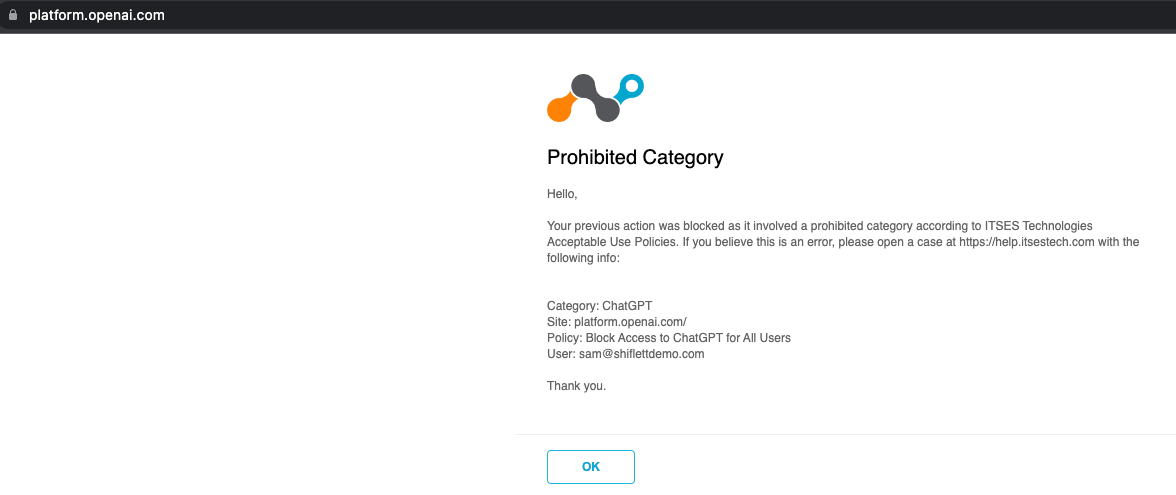

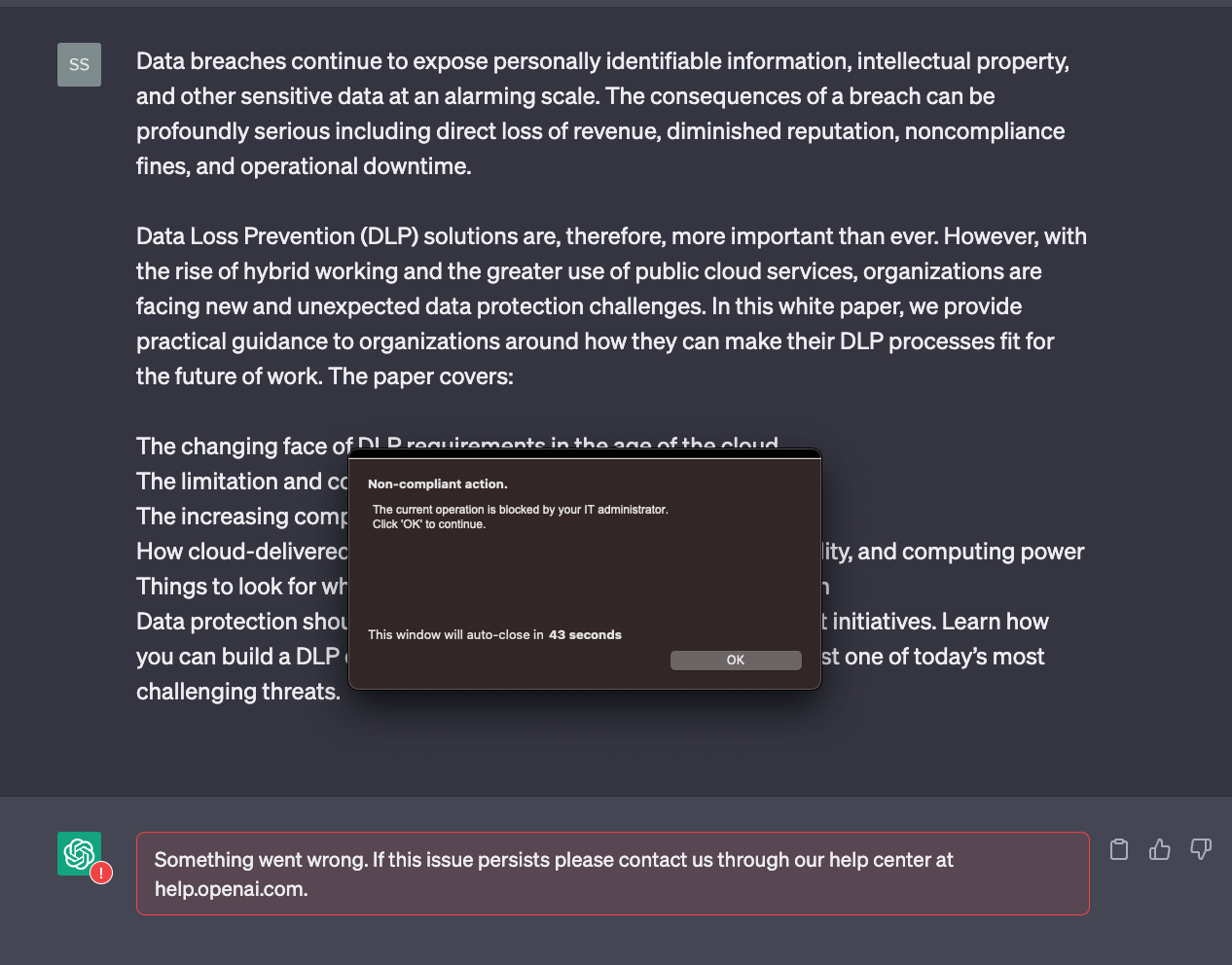

Now when any of my users attempt to access ChatGPT, they will be blocked:

You can also block all traffic to Generative AI apps using the Netskope predefined category, but this can result in a high number of blocks as more and more tools build integrations with AI apps like ChatGPT. However, as I mentioned, this is a rather heavy-handed approach. What if I want my users to have access but I worry about them putting sensitive data into ChatGPT? A traditional proxy doesn’t understand activities beyond browse, upload, and download, so you must either allow or block the activity or rely on third-party DLP solutions.
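The all-or-nothing decision described above can be sketched in a few lines of Python. This is only an illustration of a domain-based block rule, not how Netskope implements it; the domain list and the `is_blocked` helper are assumptions made up for this example.

```python
from urllib.parse import urlsplit

# Hypothetical block list. The domains are illustrative assumptions,
# not an official list of ChatGPT/OpenAI hosts.
BLOCKED_DOMAINS = {"openai.com", "chatgpt.com"}


def is_blocked(url: str) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)
```

Note that the rule only ever sees the destination. It has no idea what the user is doing or sending, which is exactly the limitation the next sections address.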

The Slightly Less Traditional Approach: Coach the Users on Using ChatGPT

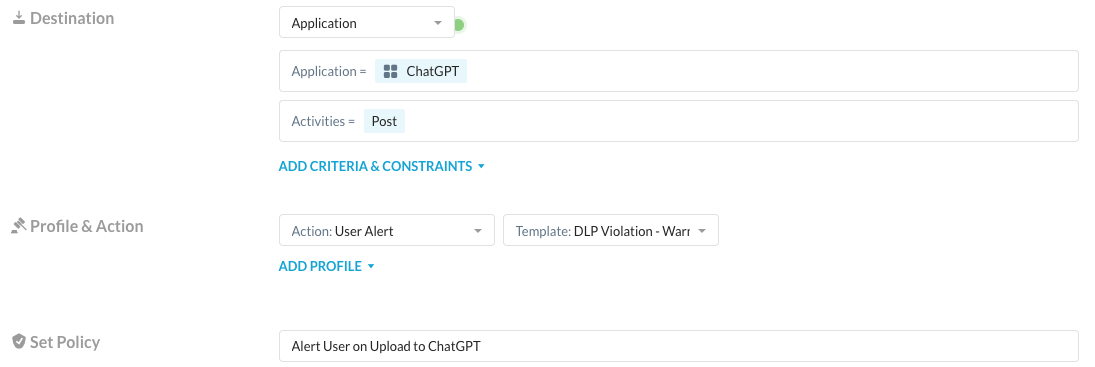

We can take the previous approach and fine-tune it a bit to allow users access to ChatGPT but coach them before they post a message. To do this, create a policy that generates a User Alert notification and requires the user to confirm their action.

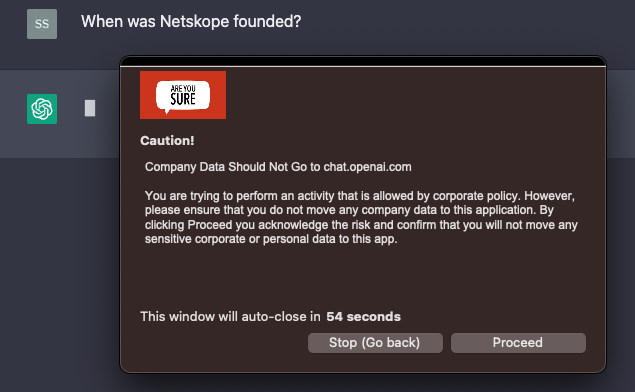

Now my user is allowed to browse to ChatGPT but when they post, they are prompted to accept the risk and confirm that they are going to appropriately handle data:

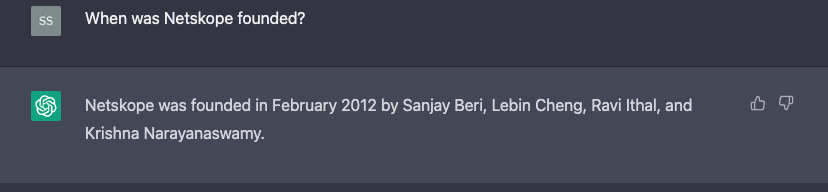

Once they click Proceed, ChatGPT completes the response:

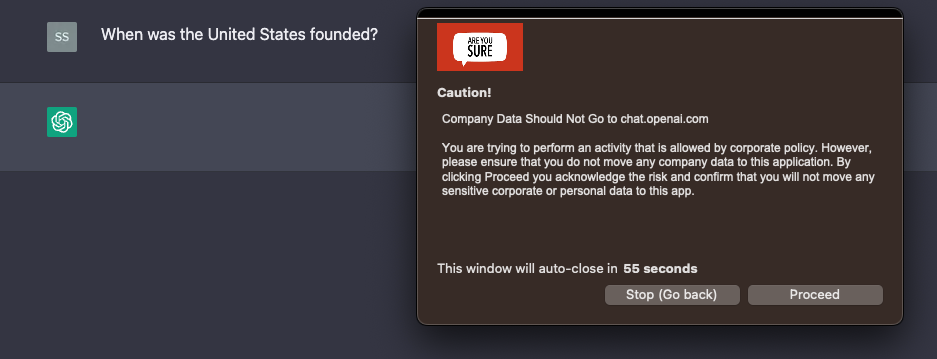

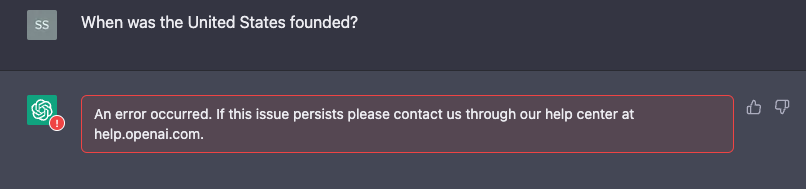

If they click Stop, then the action is blocked and no response is received:

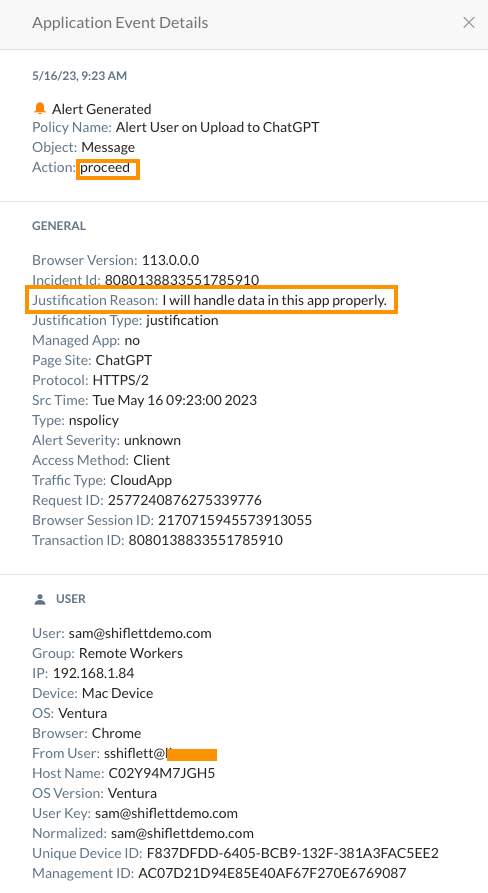

As an administrator, you get detailed logs of whether the user proceeded or stopped along with their justification reason if provided. These logs also include the username they are logged into ChatGPT with even if it's not their corporate account.
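As a rough mental model of the coaching flow, the sketch below records the user's choice and optional justification and derives whether the post goes through. The `CoachingEvent` record and `coach_post` function are hypothetical names for illustration; they do not reflect Netskope's actual log schema or API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CoachingEvent:
    """Hypothetical log record for one coached post (field names are assumptions)."""
    user: str                     # corporate identity of the user
    app_username: str             # account used inside ChatGPT, possibly personal
    choice: str                   # "proceed" or "stop"
    justification: Optional[str]  # free-text reason, if the user gave one
    allowed: bool                 # whether the post was let through


def coach_post(user: str, app_username: str, choice: str,
               justification: Optional[str] = None) -> CoachingEvent:
    """Turn the user's answer to the coaching prompt into a logged decision."""
    if choice not in {"proceed", "stop"}:
        raise ValueError(f"unexpected choice: {choice!r}")
    return CoachingEvent(user, app_username, choice, justification,
                         allowed=(choice == "proceed"))
```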

Keep in mind that Netskope will log all activities within ChatGPT even without a policy so you can see the utilization, number of posts, who is logging in, and more. I can also govern other activities.

The Next-Gen Secure Web Gateway Approach: Block Based on Data and Activity Context

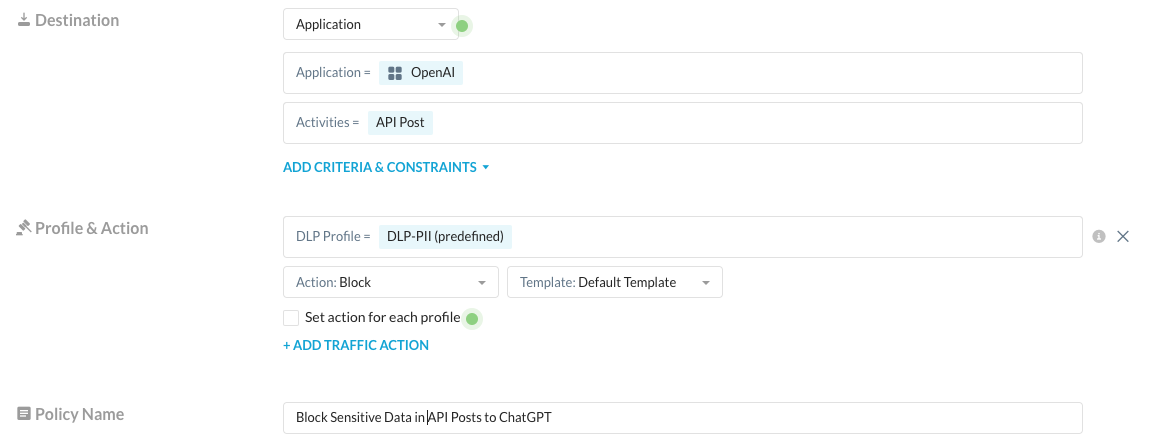

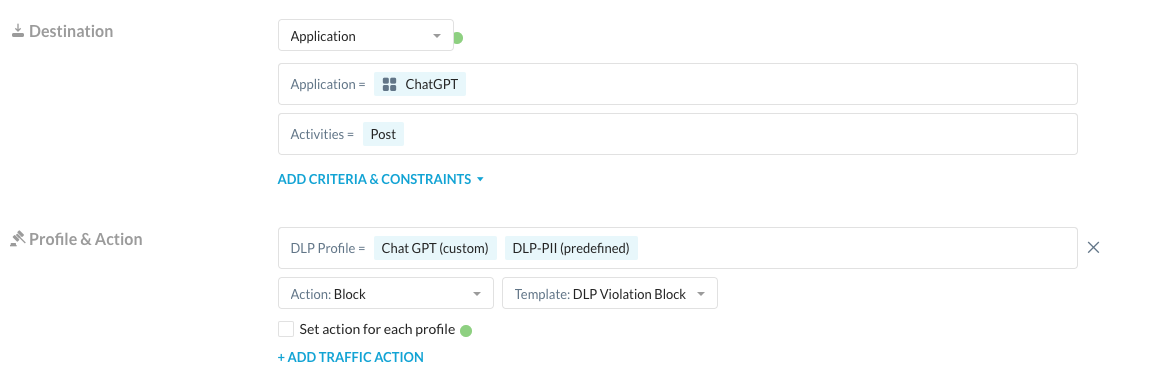

If the first approach was a hammer, then the Netskope approach is a scalpel in the hands of a skilled surgeon. Maybe we want our users to be able to use ChatGPT freely but we are still concerned about what they are posting there. After all, ChatGPT can be a powerful productivity tool for daily tasks even for the most technical employees. Because the Netskope Web Gateway has an understanding of activities within applications, I can create a policy to block Posts to ChatGPT that contain sensitive data:

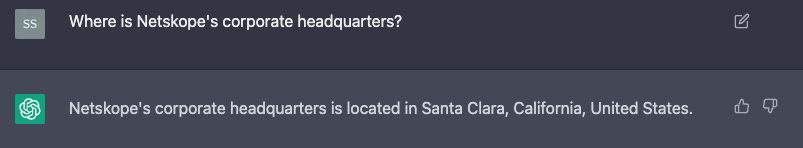

I have two DLP profiles. One is for any amount of PII and the other is for the string “ChatGPT Test String.” With the policy created, I can post harmless or non-sensitive data and get an answer:

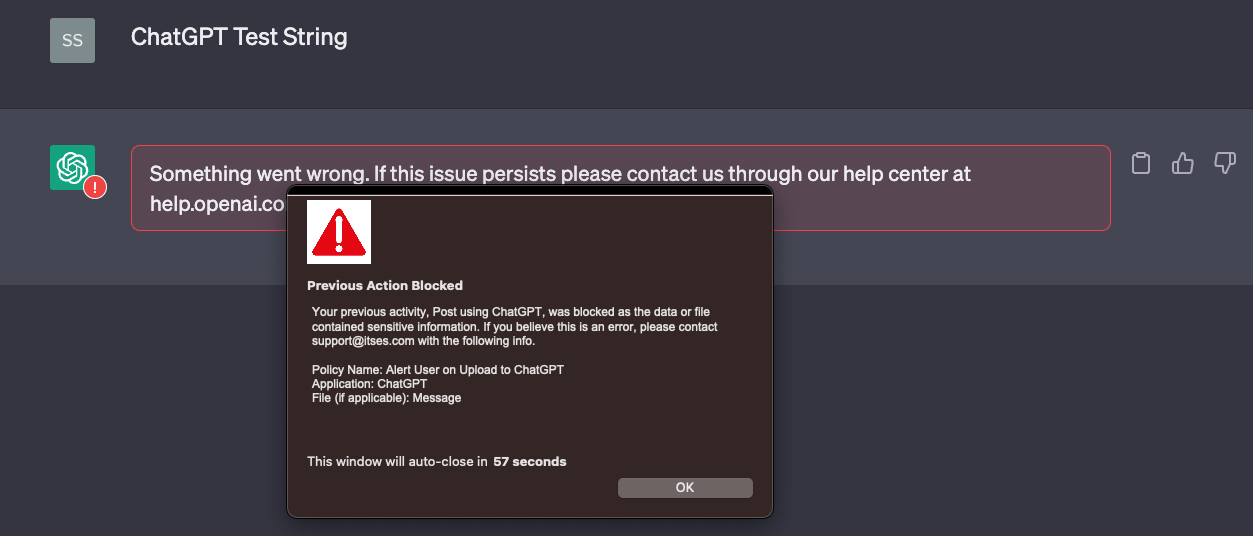

When I post the test string I get blocked by Netskope with a customizable block page:

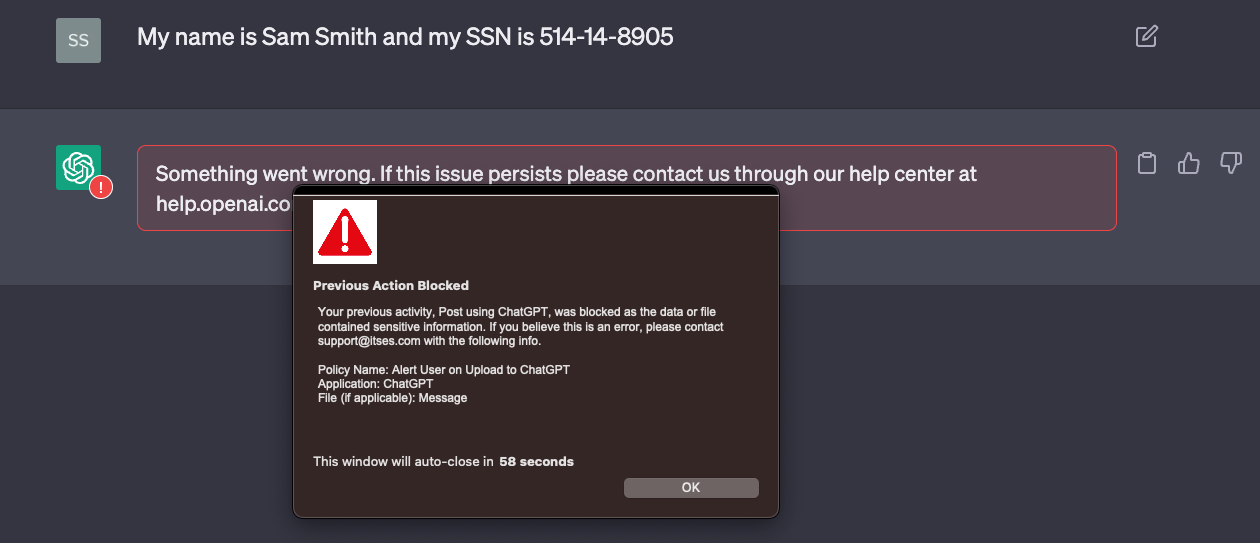

Now for the final test, let’s grab some PII data and try to post it to ChatGPT:

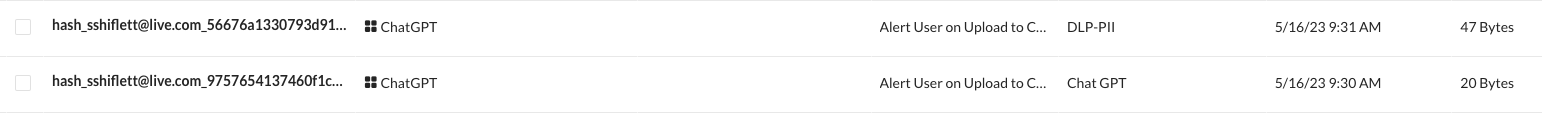

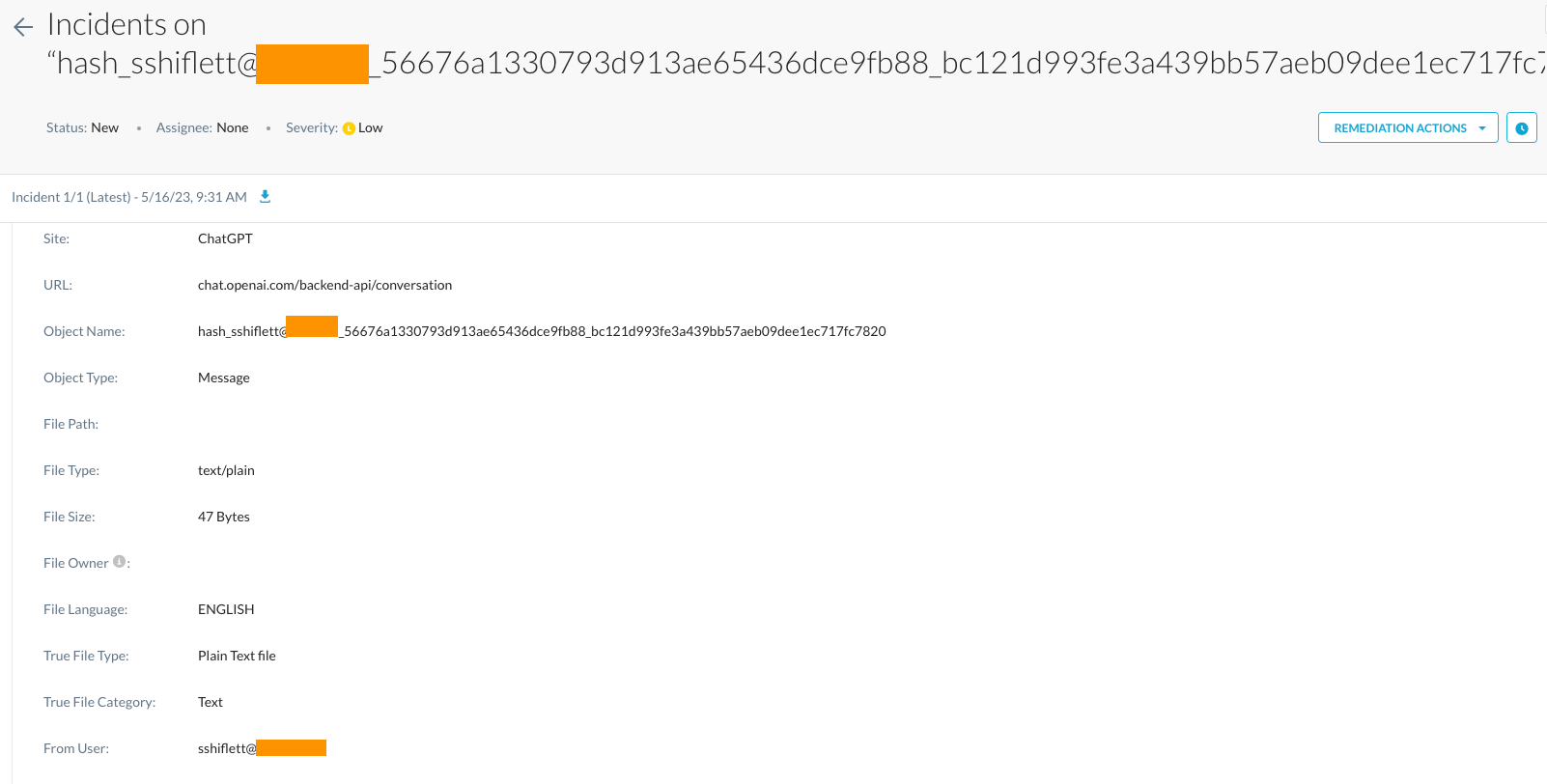

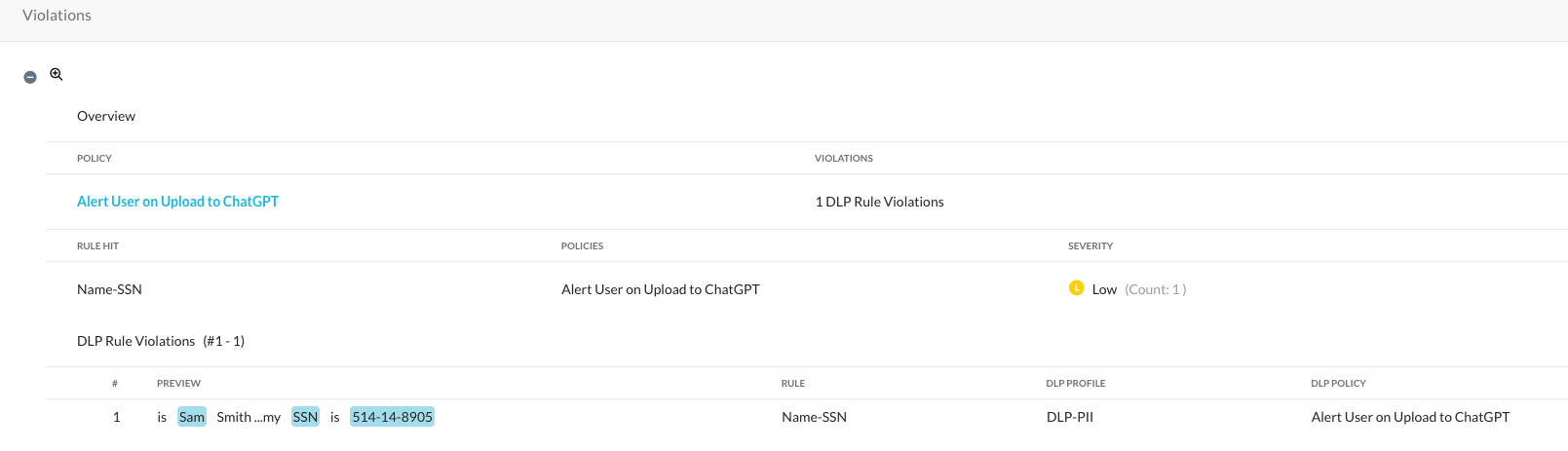

I once again get blocked with a block message informing the user of the violation. The data they tried to upload will be logged via Netskope’s Incident Management and Forensics for administrators to review as well. Netskope records a number of key forensic details on each violation, including the actual data that triggered it, the user, the application, and the username that was used in ChatGPT itself.
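To illustrate the idea behind the two DLP profiles used in this section, here is a minimal Python sketch that inspects the body of a post and decides whether to block it. It is not Netskope's DLP engine: the US Social Security number pattern is an assumed stand-in for "PII", the case-insensitive match on the test string is my own choice, and the function names are hypothetical.

```python
import re
from typing import List

# The literal string from the second DLP profile in this article.
TEST_STRING = "ChatGPT Test String"

# Assumption: a US SSN-style pattern stands in for "any amount of PII".
# A real DLP profile covers far more identifiers and validates them.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def dlp_violations(post_body: str) -> List[str]:
    """Return the names of the illustrative DLP rules that the post matches."""
    violations = []
    if TEST_STRING.lower() in post_body.lower():
        violations.append("test-string")
    if SSN_PATTERN.search(post_body):
        violations.append("pii")
    return violations


def action_for_post(post_body: str) -> str:
    """Block the post if any rule matches, otherwise allow it."""
    return "block" if dlp_violations(post_body) else "allow"
```

The key difference from the URL block is that the decision depends on the activity (a post) and its content, so harmless prompts still go through.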

Limit Post Size in ChatGPT

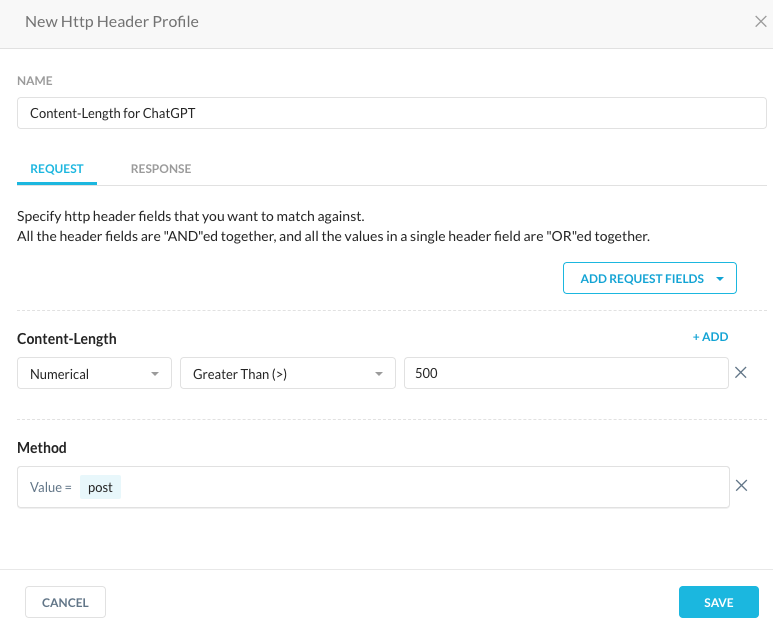

I also want to add one more control that many Netskope administrators have used. In addition to the DLP controls mentioned above, we can limit the size of posts allowed to ChatGPT by creating an HTTP header profile that limits the content length of POST activities:

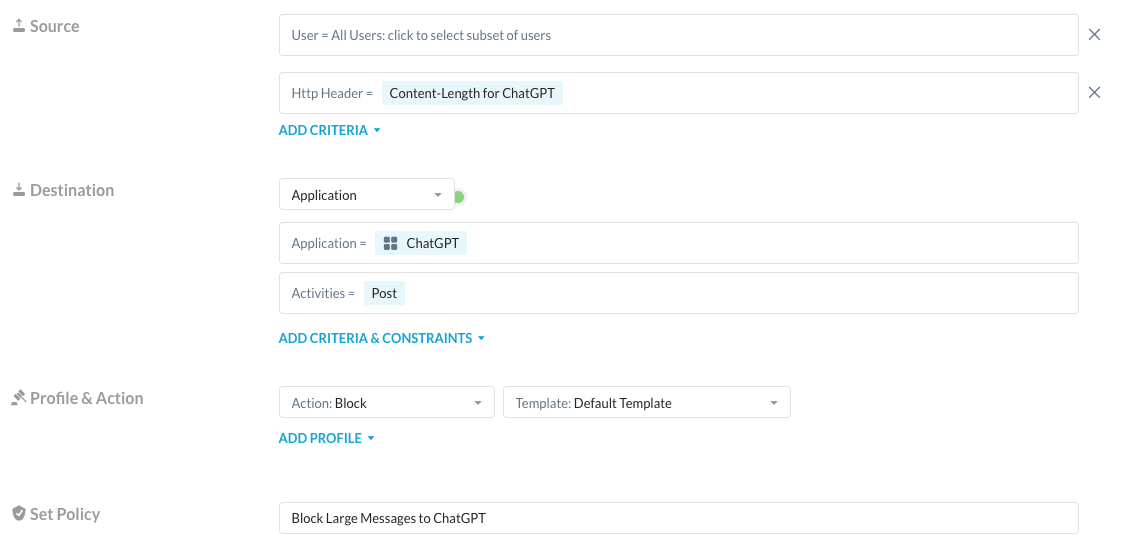

You can then create a policy that applies this header profile to the POST activity within ChatGPT:

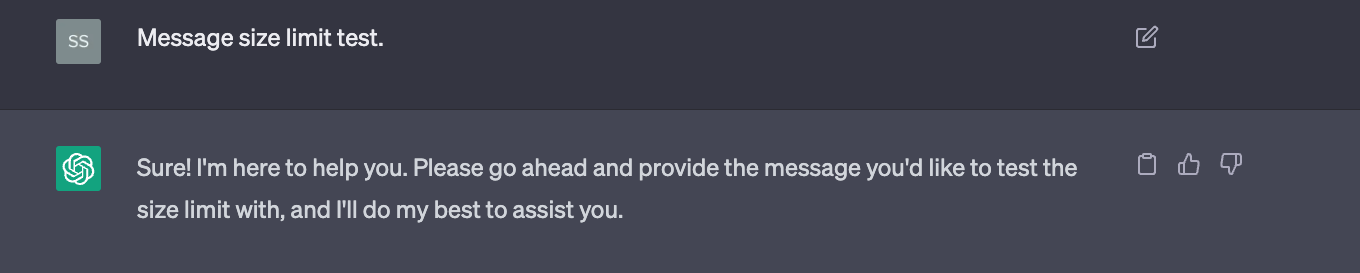

Keep in mind, you may need to adjust the content-length maximum to your organization's requirements. The "500" I've currently selected is a relatively small limit for demo purposes. When I post a smaller string of text, I'm allowed to post it:

However, when I enter a larger string, I'm blocked.
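The size check itself is simple to picture. The sketch below compares a request's `Content-Length` header (a byte count) against a limit and falls back to the body size when the header is absent. It is only an illustration under assumptions: the `post_allowed` helper is hypothetical, and treating a post of exactly 500 bytes as allowed is my own choice rather than documented Netskope behavior.

```python
from typing import Mapping

# The demo value used in this article; tune it to your organization's needs.
MAX_CONTENT_LENGTH = 500


def content_length(headers: Mapping[str, str], body: bytes) -> int:
    """Read Content-Length (header names are case-insensitive), else measure the body."""
    for name, value in headers.items():
        if name.lower() == "content-length":
            return int(value)
    return len(body)


def post_allowed(headers: Mapping[str, str], body: bytes,
                 limit: int = MAX_CONTENT_LENGTH) -> bool:
    """Allow the POST only if its size does not exceed the limit (assumed inclusive)."""
    return content_length(headers, body) <= limit
```

Keep in mind that the limit applies to the whole request body, so the usable prompt length is somewhat smaller than the configured value once any request wrapping is counted.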

Controlling Logins to ChatGPT

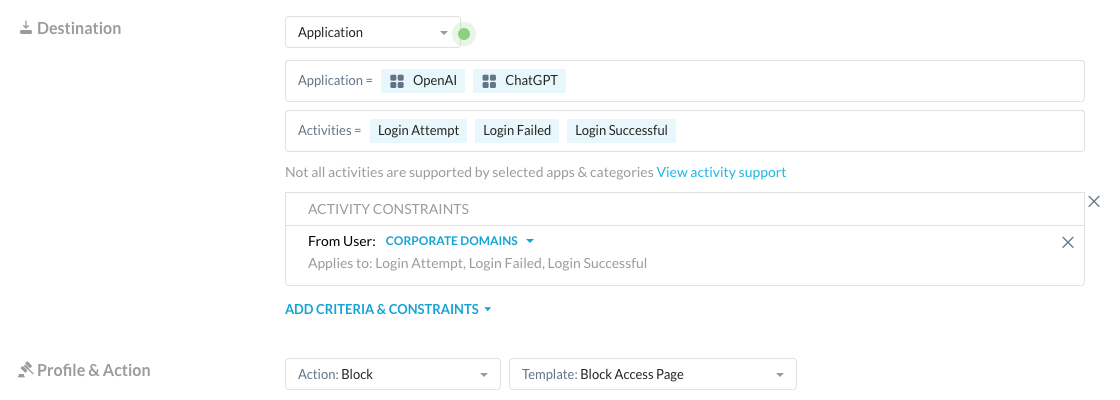

An additional capability of the Netskope Next-Gen Secure Web Gateway is contextual control over login activities. This applies to hundreds of applications, including ChatGPT and OpenAI. You can use these controls to restrict users to corporate usernames and block logins with personal accounts such as Gmail. For example, if you want to ensure users only use your corporate domain, you can create a user constraint that matches any login that does not belong to your corporate domains:

You can then create a Real-time Protection policy to use this constraint profile to block access from domains that don't match your corporate domains:

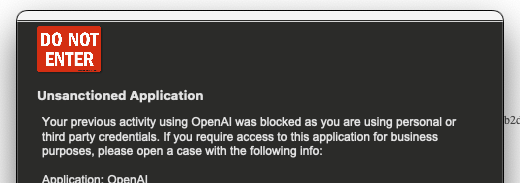

Now when I attempt to sign in using my personal credentials, I receive a message informing me that I can't use personal credentials for this app.
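The constraint boils down to checking the domain part of the login name. Here is a minimal Python sketch of that check, assuming a hypothetical corporate domain of `example.com`; the `login_allowed` helper is made up for this example and is not a Netskope API.

```python
# Hypothetical corporate domain list; replace with your own domains.
CORPORATE_DOMAINS = {"example.com"}


def login_allowed(username: str) -> bool:
    """Allow a login only when the username's domain is a corporate domain."""
    _, separator, domain = username.rpartition("@")
    # Usernames without an "@" cannot be tied to a corporate domain.
    return bool(separator) and domain.lower() in CORPORATE_DOMAINS
```

With this rule, `alice@example.com` is allowed while `alice@gmail.com` is rejected.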

May 2023 Update

Previous versions of this post utilized custom URL lists and a custom app definition for ChatGPT controls. These are no longer necessary as Netskope has a predefined app connector for ChatGPT. If you were using the previous method, you can replace the custom app connector in Real-time Protection Policy with the Netskope app connector and delete the custom connector.

January 2024 Update

With release 111, Netskope has introduced changes to the ChatGPT controls. These changes are documented here. This article has been updated to reflect the required changes to govern ChatGPT. As of R111, Login activity detection is based on whether the login is via a federated provider or the native OpenAI login.

- Federated logins via Google, Microsoft, and Apple will be governed and detected as ChatGPT.

- Native OpenAI logins will be governed and detected as OpenAI.
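When scoping policies, it helps to keep this mapping straight. The small Python sketch below encodes the two rules above; the provider labels (including `"openai"` for the native login) and the `detected_app` function are assumptions for illustration only.

```python
# Federated providers named in the R111 change described above.
FEDERATED_PROVIDERS = {"google", "microsoft", "apple"}


def detected_app(login_method: str) -> str:
    """Map a login method to the app name the login is detected and governed as."""
    method = login_method.strip().lower()
    if method in FEDERATED_PROVIDERS:
        return "ChatGPT"
    if method == "openai":  # assumed label for the native OpenAI login
        return "OpenAI"
    raise ValueError(f"unknown login method: {login_method!r}")
```

In practice this means a login policy usually needs to cover both apps if you want it to apply regardless of how users sign in.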

Netskope also introduced support for API-based activity to OpenAI to prevent data exfiltration via API tools such as Postman. You can apply DLP and access control policies to this API traffic using a Real-time Protection policy scoped to OpenAI with the API Post activity: