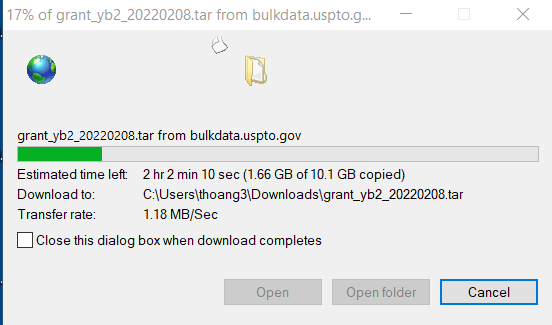

Is anyone else having issues with access to private storage through NPA (Private Access) becoming extremely slow? We are mostly a Windows shop, and a large portion of our end users get insanely slow speeds when they move files from one of our on-prem NetApp storage systems, or even from a Windows-based file server, while connected through ZTNA. It mainly seems to affect transfers of lots of small files rather than single large files. With the client uninstalled and the user back on our traditional VPN, we get roughly what we would expect (a few megabits per second, depending on the user's internet speed). Enabling the client drops us to ancient dial-up modem speeds, sometimes as low as 300 bits per second!
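To put numbers on the many-small-files versus one-large-file difference, a script like the following could be run once on the traditional VPN and once with the client enabled. It is only a rough sketch under assumptions: the `NPA_TEST_SHARE` environment variable, the function names, and the 8 MiB total / 4 KiB per-file sizes are hypothetical choices, and it only measures copies from the local machine to the share (swap source and destination to test the other direction).

```python
import os
import shutil
import tempfile
import time
from pathlib import Path


def make_files(root: Path, count: int, size: int) -> list[Path]:
    """Create `count` random files of `size` bytes each under `root`."""
    root.mkdir(parents=True, exist_ok=True)
    paths = []
    for i in range(count):
        p = root / f"file_{i:05d}.bin"
        p.write_bytes(os.urandom(size))
        paths.append(p)
    return paths


def timed_copy(files: list[Path], dest: Path) -> float:
    """Copy `files` into `dest` one by one and return throughput in bits/s."""
    dest.mkdir(parents=True, exist_ok=True)
    total_bytes = sum(f.stat().st_size for f in files)
    start = time.perf_counter()
    for f in files:
        shutil.copyfile(f, dest / f.name)
    elapsed = time.perf_counter() - start
    return total_bytes * 8 / max(elapsed, 1e-9)


def compare(dest_share: Path, total_bytes: int = 8 * 1024 * 1024,
            small_size: int = 4 * 1024) -> dict[str, float]:
    """Copy the same amount of data as many small files and as one large file."""
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp)
        small = make_files(src / "small", total_bytes // small_size, small_size)
        large = make_files(src / "large", 1, total_bytes)
        return {
            "many_small_bps": timed_copy(small, dest_share / "small"),
            "one_large_bps": timed_copy(large, dest_share / "large"),
        }


if __name__ == "__main__":
    # Hypothetical env var pointing at a writable folder on a share that is
    # reached through NPA, e.g. \\fileserver\share\npa-test
    target = os.environ.get("NPA_TEST_SHARE")
    if target is None:
        print("Set NPA_TEST_SHARE to a writable folder on the file share")
    else:
        for label, bps in compare(Path(target)).items():
            print(f"{label}: {bps / 1e6:.3f} Mbit/s")
```

If the small-file figure collapses only when the client is enabled while the large-file figure stays reasonable, that would point at per-file overhead over the tunnel rather than raw bandwidth.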
