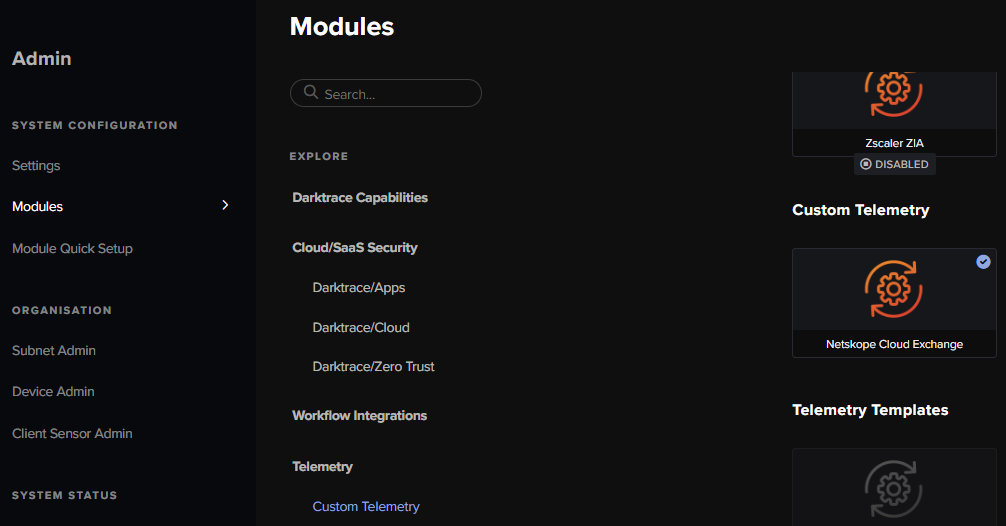

Has anyone had any luck with Syslog integration from Cloud Exchange into Darktrace?

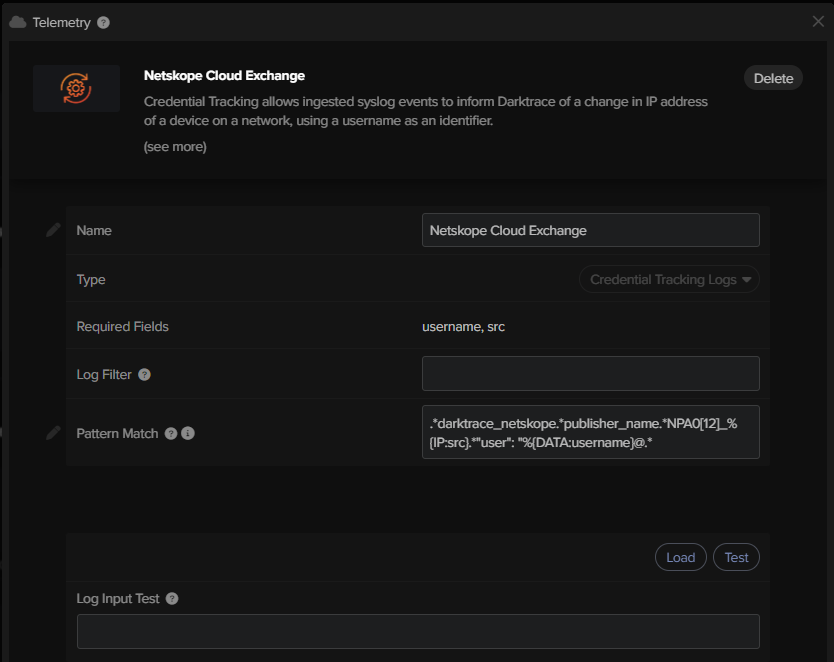

Darktrace describes the expected format like this:

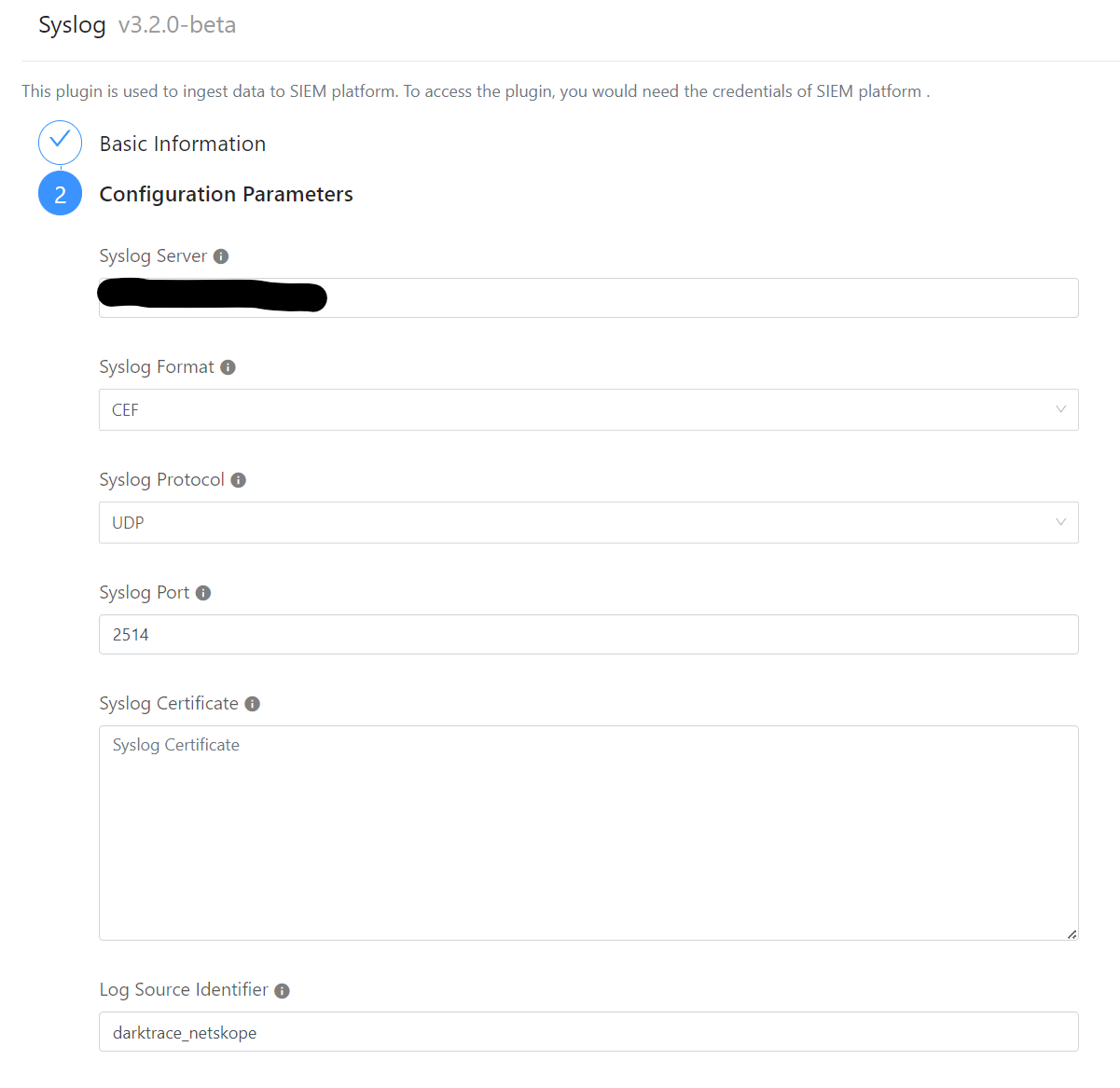

Darktrace expects the Netskope Web Gateway data to include the string "darktrace_netskope" followed by a JSON formatted representation of the Netskope logging. The order of fields within the JSON is not important. For example: `darktrace_netskope {"src_time": "Fri Jan 1 00:10:00 2021","userkey": "user.name@company.com","dst_region": "Region","category": "Business","src_longitude": 52.2,"transaction_id": 0,"ur_normalized": "user.name@company.com","src_latitude": 0.12,"dst_longitude": 52.2,"domain": "www.example.org","dst_zipcode": "555555","access_method": "Client","src_timezone": "Global/UTC","ccl": "unknown","bypass_reason": "SSL policy matched","user_generated": "yes","dst_country": "ZZ","srcip": "198.51.100.1","site": "example","traffic_type": "Web","src_region": "Region","user": "user.name@example.org","appcategory": "Business","page_id": 0,"insertion_epoch_timestamp": 1604614281,"bypass_traffic": "yes","dst_location": "Region","count": 1,"src_location": "Location","url": "www.example.org","src_country": "ZZ","internal_id": "21c3e4368567eae234d211de","dst_latitude": 0.12,"dst_timezone": "Global/UTC","policy": "Bypass","type": "page","ssl_decrypt_policy": "yes","src_zipcode": "555555","dstip": "198.51.100.2","timestamp": 1604614213,"page": "www.example.or","dstport": 443,"userip": "192.168.1.1","organization_unit": ""}`
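To make the expected shape concrete, here is a minimal Python sketch that renders one Netskope event as the identifier, a space, and single-line JSON. The helper name `to_darktrace_line` is hypothetical and not part of Cloud Exchange or Darktrace; it only illustrates the string format Darktrace describes.

```python
import json

# Identifier Darktrace looks for, taken from the quoted format description.
DARKTRACE_PREFIX = "darktrace_netskope"


def to_darktrace_line(event: dict) -> str:
    """Hypothetical helper: render an event as '<prefix> <compact JSON>'."""
    # Compact separators keep the JSON on one line; Darktrace says field
    # order does not matter, so the keys are not sorted.
    body = json.dumps(event, separators=(",", ":"))
    return f"{DARKTRACE_PREFIX} {body}"


if __name__ == "__main__":
    sample = {"srcip": "198.51.100.1", "dstip": "198.51.100.2",
              "dstport": 443, "type": "page", "url": "www.example.org"}
    print(to_darktrace_line(sample))
```

The key point is that the identifier sits outside the JSON object, so any pipeline that emits only the raw JSON will drop it.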

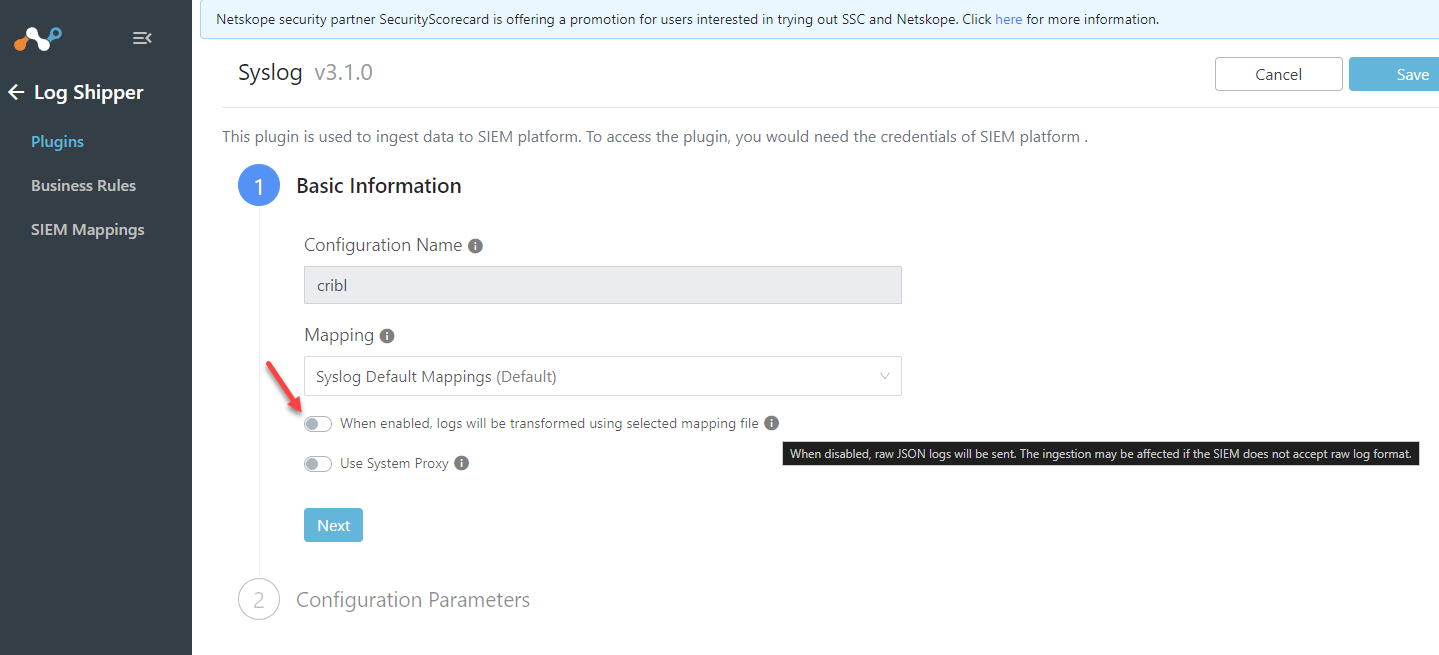

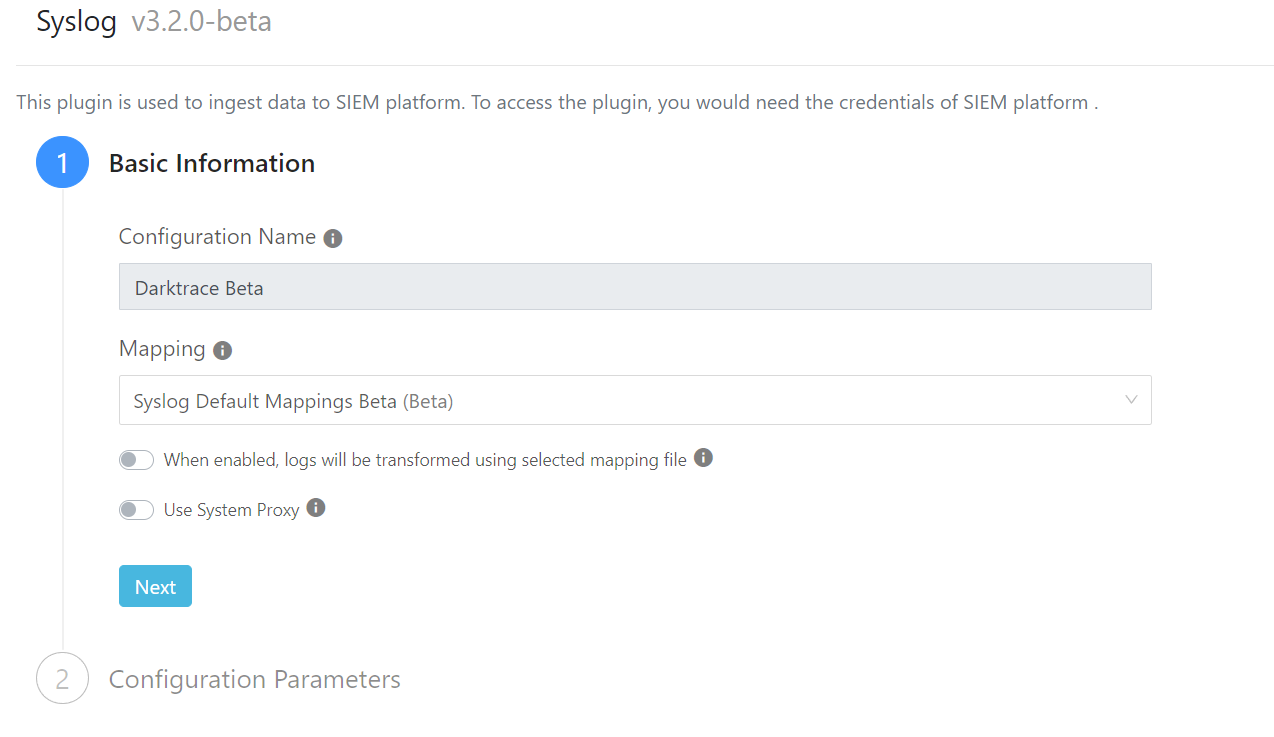

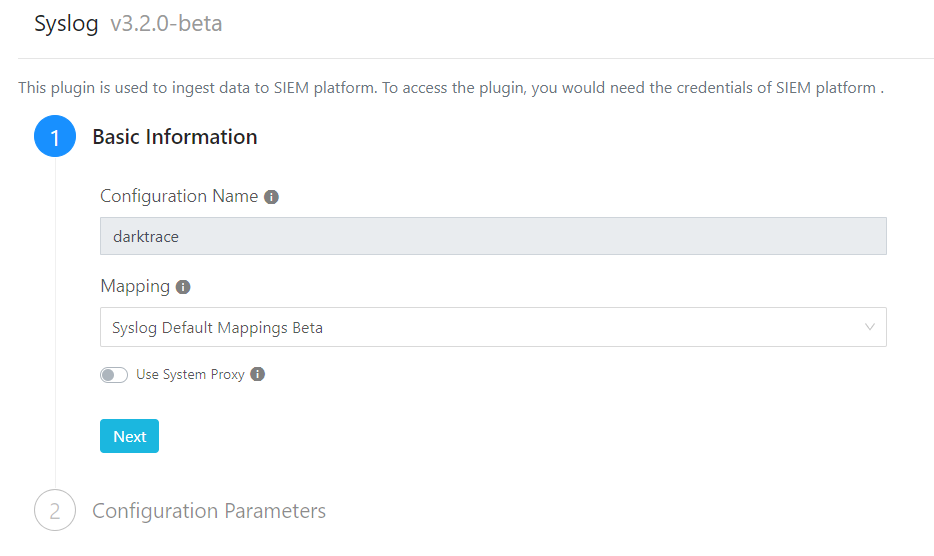

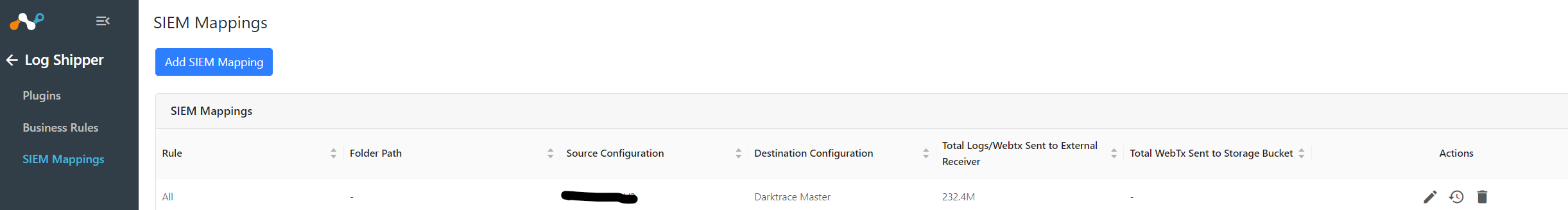

I have managed to use the Syslog plugin in Cloud Exchange to send the raw JSON by disabling the CEF mapping, but doing so I lose the Log Source Identifier “darktrace_netskope” that I configured, so Darktrace doesn’t pick up the logs.
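One way to check whether Darktrace accepts the format independently of Cloud Exchange is to send it a hand-built syslog message. The sketch below is a hypothetical test helper, not part of the Cloud Exchange Syslog plugin. It assumes BSD-style (RFC 3164) framing over UDP, and it assumes Darktrace only needs "darktrace_netskope" directly before the JSON in the message text; the source does not confirm which syslog field Darktrace matches on. The hostname, facility, and severity defaults are illustrative.

```python
import json
import socket
import time

# Hypothetical test helper, NOT part of the Cloud Exchange Syslog plugin.
# Assumption: Darktrace only needs "darktrace_netskope" immediately before the
# JSON payload in the syslog message text, as in the quoted example.
IDENTIFIER = "darktrace_netskope"


def build_syslog_message(event: dict, hostname: str = "netskope-ce",
                         facility: int = 1, severity: int = 6) -> bytes:
    """Build a BSD-style (RFC 3164) line: <PRI>TIMESTAMP HOST MSG."""
    pri = facility * 8 + severity  # facility 1 (user), severity 6 (info) -> 14
    now = time.localtime()
    # RFC 3164 timestamp is "Mmm dd hh:mm:ss" with the day padded by a space.
    timestamp = (f"{time.strftime('%b', now)} {now.tm_mday:2d} "
                 f"{time.strftime('%H:%M:%S', now)}")
    body = json.dumps(event, separators=(",", ":"))
    return f"<{pri}>{timestamp} {hostname} {IDENTIFIER} {body}".encode("utf-8")


def send_syslog_udp(message: bytes, host: str, port: int = 514) -> None:
    """Send one syslog datagram over UDP (no delivery guarantee)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


if __name__ == "__main__":
    sample = {"srcip": "198.51.100.1", "dstip": "198.51.100.2",
              "dstport": 443, "type": "page"}
    # Print only; sending requires a real collector address.
    print(build_syslog_message(sample).decode("utf-8"))
```

To try it against a collector, call `send_syslog_udp(build_syslog_message(event), "<darktrace-host>")` with your own host, and adjust the transport and port to whatever the Darktrace syslog input is configured for. If Darktrace ingests this message but not the Cloud Exchange output, comparing the two raw lines (for example with a packet capture) should show where the identifier is lost.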