The Blank Page Problem in Security Testing

Every security assessment begins with a mountain of documentation and a crucial question: "Where would an attacker start?" For testers, translating functional specs, architectural diagrams, and API details into a concrete set of high-impact security tests is one of the most challenging and time-consuming parts of the job. It's a process that relies heavily on experience, intuition, and a meticulous, almost paranoid, mindset.

The "blank page" problem is real. You can spend hours sifting through documents, trying to connect the dots between a new feature and a potential vulnerability. It’s easy to get lost in the details, miss a subtle logic flaw, or simply run out of time and fall back on a generic checklist. I realized that what we needed wasn't just another tool, but a partner—a virtual expert that could do the heavy lifting of analysis and brainstorming, allowing testers to focus on verification and exploitation. This led me to create the AbuseCaseGen Gem, a specialized AI designed to operate as a virtual Principal Security Engineer.

Shifting from Manual Toil to Assisted Analysis

The primary goal of the AbuseCaseGen Gem is to support and assist testers during the crucial abuse case development phase. Instead of starting from scratch, a tester can provide the Gem with all the technical documentation they have. The Gem's job is to ingest this information and produce a structured, prioritized, and actionable list of security abuse cases that are directly relevant to the feature being tested.

This isn't about replacing the security professional; it's about augmenting their capabilities. Think of it as having a senior engineer on your team whose sole focus is to read the documentation, ask the right questions, and brainstorm potential attack vectors based on established security standards. This frees up the human tester to apply their creativity and critical thinking to the more nuanced aspects of the assessment.

How It Works: A Human-in-the-Loop Collaboration

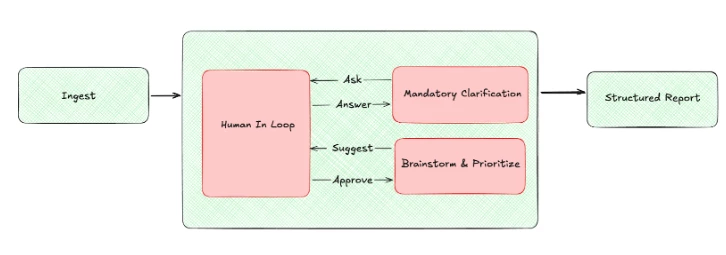

To ensure the Gem produced high-quality, reliable results, I built its workflow around a Human-in-the-Loop (HITL) model. This approach combines the systematic analysis of AI with the critical judgment of a security expert. It's not a black box spitting out random vulnerabilities; it's a collaborative process grounded in principles I'd expect from a human expert: meticulous analysis, zero assumptions, and a clear focus on impact.

Step 1: The "Zero Assumption" Mandate

The collaboration begins with a foundational principle: the Zero Assumption Policy. The Gem will never make assumptions about the architecture, technology stack, or functionality. This mandate is the trigger for human interaction, ensuring that every subsequent step is based on factual ground truth, not AI-driven guesswork.

Step 2: The Mandatory Clarification Step

If any detail is ambiguous or missing after the initial analysis, the Gem stops and initiates a dialogue. This is the Mandatory Clarification loop shown in the workflow. It prompts the human expert with specific questions, such as:

- "The documentation for the updateUserProfile API does not specify the authorization model. Is this endpoint accessible to any authenticated user, or only to the user whose profile is being modified?"

- "You've mentioned a data export feature. What data formats are supported, and are there rate limits on how often a user can perform an export?"

The expert provides the answers, resolving ambiguity and ensuring the AI's understanding is complete before it proceeds. This back-and-forth is critical for accuracy.
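As a rough illustration of this loop, the sketch below refuses to move on until every required fact has an answer from the expert. It is not the Gem's actual implementation: `FeatureSpec`, `REQUIRED_FACTS`, `missing_facts`, and `clarify` are hypothetical names, and the two required facts are simply borrowed from the example questions above.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical facts that must be known before analysis may continue.
REQUIRED_FACTS = ("authorization_model", "rate_limits")


@dataclass
class FeatureSpec:
    name: str
    facts: dict[str, str] = field(default_factory=dict)


def missing_facts(spec: FeatureSpec) -> list[str]:
    """Return the required facts that are absent or blank."""
    return [f for f in REQUIRED_FACTS if not spec.facts.get(f, "").strip()]


def clarify(spec: FeatureSpec, ask: Callable[[str], str]) -> FeatureSpec:
    """Ask the expert about each gap, repeating until no gaps remain.

    `ask` takes a question and returns the expert's answer.
    """
    while gaps := missing_facts(spec):
        for fact in gaps:
            question = (
                f"The documentation for {spec.name} does not specify the "
                f"{fact.replace('_', ' ')}. What is it?"
            )
            spec.facts[fact] = ask(question)
    return spec
```

In an interactive session, `ask` could simply be the built-in `input`. A blank answer leaves the gap open, so the question is asked again rather than guessed at.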

Step 3: Brainstorming Grounded in Reality & Prioritization

Once clarity is established, the process moves to the Brainstorm & Prioritize loop.

- Suggest: The Gem systematically cross-references the feature's components with knowledge bases like OWASP and CWE. For example, if it identifies a file upload feature, it will immediately correlate that with CWE-434 (Unrestricted Upload of File with Dangerous Type). This isn't a simple keyword match: it analyzes the context provided in the documents to draft an initial, extensive list of potential abuse cases.

- Approve: This list is presented to the human expert, who uses their intuition and business context to refine, approve, and prioritize the suggestions. The AI handles the breadth of brainstorming, while the human provides the depth of expert judgment, focusing on the 15-30 most relevant cases based on business logic, data exfiltration risk, and high-impact vulnerabilities.
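The Suggest and Approve steps can be pictured with a deliberately simplified sketch. The Gem reasons over document context, so the plain lookup table here is only a stand-in for that step. The component names, `COMPONENT_TO_CWE`, `suggest`, and `approve` are all hypothetical; the CWE identifiers and titles are real entries.

```python
from __future__ import annotations

from dataclasses import dataclass

# Illustrative mapping only; a real knowledge base would be far larger
# and would be consulted with context, not by exact key.
COMPONENT_TO_CWE = {
    "file_upload": ("CWE-434", "Unrestricted Upload of File with Dangerous Type"),
    "sql_query": (
        "CWE-89",
        "Improper Neutralization of Special Elements used in an SQL Command",
    ),
    "url_fetch": ("CWE-918", "Server-Side Request Forgery (SSRF)"),
}


@dataclass(frozen=True)
class Suggestion:
    component: str
    cwe_id: str
    title: str


def suggest(components: list[str]) -> list[Suggestion]:
    """Suggest step: map each recognized component to a candidate weakness."""
    return [
        Suggestion(c, *COMPONENT_TO_CWE[c])
        for c in components
        if c in COMPONENT_TO_CWE
    ]


def approve(suggestions: list[Suggestion], approved_ids: set[str]) -> list[Suggestion]:
    """Approve step: keep only what the human expert signed off on."""
    return [s for s in suggestions if s.cwe_id in approved_ids]
```

The split into two functions mirrors the division of labor: `suggest` is cheap and broad, while `approve` takes its input from a person rather than from the model.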

An unprioritized list of 100 potential issues is just noise; the real value comes from focus. The Gem ranks its initial list with a clear prioritization scheme so that, with the expert's approval, it can be cut down to the 15-30 most relevant cases:

- Priority 1: Business Logic & Fraud: Attacks that exploit the intended workflow of the feature for financial gain or to cause business disruption.

- Priority 2: Data Exfiltration & Privacy Violations: Attacks focused on accessing or stealing sensitive user or company data.

- Priority 3: Common High-Impact Vulnerabilities: Classic but critical issues like Injection, Broken Authentication, and Server-Side Request Forgery (SSRF).

This ensures the final list highlights the threats that matter most to the business, rather than just low-impact technical findings.
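One minimal way to express this scheme in code, assuming each candidate has already been assigned to one of the three tiers, is a stable sort followed by a cap. The `Priority` enum, the `prioritize` helper, and the sample case titles are hypothetical; the limit of 30 reflects the upper end of the 15-30 range mentioned above.

```python
from __future__ import annotations

from enum import IntEnum


class Priority(IntEnum):
    BUSINESS_LOGIC_FRAUD = 1
    DATA_EXFILTRATION_PRIVACY = 2
    COMMON_HIGH_IMPACT = 3


MAX_CASES = 30  # upper end of the 15-30 range


def prioritize(
    cases: list[tuple[str, Priority]], limit: int = MAX_CASES
) -> list[tuple[str, Priority]]:
    """Order cases by tier and keep at most `limit` of them.

    sorted() is stable, so cases within a tier keep their original order.
    """
    return sorted(cases, key=lambda case: case[1])[:limit]


candidates = [
    ("SSRF via webhook URL", Priority.COMMON_HIGH_IMPACT),
    ("Coupon replay across accounts", Priority.BUSINESS_LOGIC_FRAUD),
    ("Bulk export of other users' data", Priority.DATA_EXFILTRATION_PRIVACY),
]
shortlist = prioritize(candidates, limit=2)
```

With a limit of two, the lowest tier drops off first, which is the intended behavior: business logic and data exposure cases survive the cut before generic technical findings do.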

The Final Output: A Structured and Actionable Report

The process culminates in a structured report that is designed to be directly usable by security and development teams. Each abuse case is detailed across seven specific fields, transforming a simple idea into a trackable work item.

- Abuse Case Unique Id: ABUSE_CASE_001

- Product Area Impacted: e.g., Authentication, Backend API

- Abuse Case's Attack Description: A concise, 2-4 sentence technical description.

- Netskope Defect Severity: Blocker, Critical, Major, Minor, or Trivial (based on CVSS).

- Abuse Case Category: e.g., Business Logic, Input Validation, Data Exfiltration.

- Counter-measure Applicable: A recommended mitigation strategy.

- Handling Decision: A placeholder status (e.g., To Address, Risk Accepted).
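The seven fields translate naturally into a typed record. The sketch below is one possible shape, not the Gem's actual output format: the class and attribute names are assumptions, the sample values are invented for illustration, and no CVSS-to-severity thresholds are encoded because the mapping is not specified here.

```python
from dataclasses import asdict, dataclass
from enum import Enum
import json


class Severity(Enum):
    BLOCKER = "Blocker"
    CRITICAL = "Critical"
    MAJOR = "Major"
    MINOR = "Minor"
    TRIVIAL = "Trivial"


class HandlingDecision(Enum):
    TO_ADDRESS = "To Address"
    RISK_ACCEPTED = "Risk Accepted"


@dataclass
class AbuseCase:
    unique_id: str
    product_area: str
    attack_description: str
    severity: Severity
    category: str
    counter_measure: str
    handling_decision: HandlingDecision = HandlingDecision.TO_ADDRESS

    def to_json(self) -> str:
        """Serialize to JSON, writing enum members as their display strings."""
        record = asdict(self)
        record["severity"] = self.severity.value
        record["handling_decision"] = self.handling_decision.value
        return json.dumps(record, indent=2)


# Invented sample values, for illustration only.
case = AbuseCase(
    unique_id="ABUSE_CASE_001",
    product_area="Backend API",
    attack_description="An attacker uploads an executable file disguised as an image.",
    severity=Severity.CRITICAL,
    category="Input Validation",
    counter_measure="Validate file type server-side and store uploads outside the web root.",
)
```

Using enums for severity and handling decision means a typo such as "Critcal" fails at construction time instead of slipping into the tracker.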

Conclusion: Your Turn to Build

Creating the AbuseCaseGen Gem has significantly streamlined our initial security assessment phase, allowing us to generate a high-quality baseline of test cases in a fraction of the time. It has proven that by combining the analytical power of AI with the expertise of a security professional, we can create powerful tools that elevate our work.

The principles behind this Gem—zero assumptions, mandatory clarification, and structured output—are not limited to AI. They are the hallmarks of any good security analysis.

So, here is my call to action for you: How can you apply this model to your own workflow? Think about the most repetitive, time-consuming parts of your job. Could a specialized AI assistant, guided by a strong and specific prompt, help you automate the toil and free you up to focus on what humans do best: thinking critically and creatively? I encourage you to experiment and share what you build. The future of security engineering is not about being replaced by AI, but about being empowered by it.