“Another Security Assessment report to review? I’ll need coffee… and maybe another pair of eyes.” If you’ve ever found yourself buried in long, detailed Security Assessment reports, you know the pain.

- Pages of findings to go through.

- CVSS scores that may (or may not) be consistent.

- Missing screenshots or vague remediation steps.

- And the endless back-and-forth just to make the report business-ready.

Sound familiar? You’re not alone. Manual report reviews are slow, error-prone, and heavily dependent on the reviewer’s bandwidth and expertise. But what if a smart first-pass reviewer could flag gaps, standardize structure, check consistency across findings and recommendations, and free us to focus on the real contextual risk analysis?

That’s exactly what we set out to build — an LLM-powered Report Reviewer.

Why Report Reviews Matter More Than Ever

Security assessments don’t end when testing is complete. The report is what travels to:

- Engineering teams → to guide fixes.

- Executives and stakeholders → to understand risk.

A single vague sentence in the report can cause weeks of delay:

- No clear reproduction steps? → Engineers can’t reproduce the issue.

- No CVSS score? → Severity gets questioned.

- No remediation details? → The fix is half-baked.

Inconsistent reports = inconsistent outcomes. And inconsistency = risk.

Why Bring in LLMs?

LLMs aren’t here to replace manual reviewers. They’re here to:

- Act as a first line of defense — spotting missing pieces and inconsistencies.

- Standardize reviews across all assessment reports.

- Speed up the process so human reviewers spend time on risk validation, not formatting checks.

Think of it as your always-on analyst — one that never gets tired of reading 50-page reports.

What Does the LLM Review Actually Check?

We built the review process to mimic what a senior reviewer would do — but automated.

Every finding is assessed across:

- Title / Name

- Steps to Reproduce (Yes/No)

- CVSS Score (and actual score if present)

- Impact Analysis (quoted exactly from report)

- Remediation Recommendations (quoted exactly from report)

- Finding-level Quality Rating (Excellent, Good, Needs Improvement, Poor)

The result? A consolidated findings table that turns a bulky report into an at-a-glance dashboard.

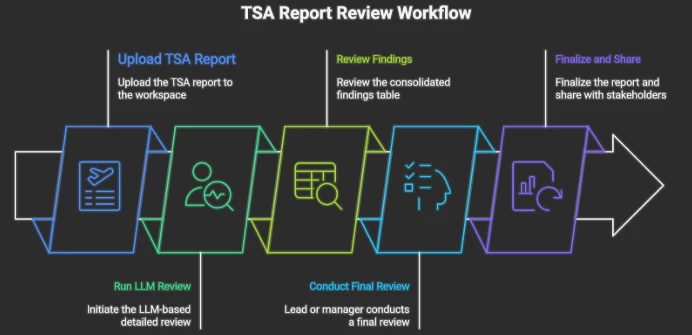
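In our setup the LLM writes this table directly as markdown. If you also want to store results or compare reviews across reports, it can help to hold each row in a typed structure first. The sketch below is a hypothetical schema (the names `FindingReview`, `Rating`, and `to_markdown` are ours, not part of any tool) whose columns mirror the checks listed above, with absent fields rendered as the prompt's fallback text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

NOT_SPECIFIED = "Not specified in the document."


class Rating(Enum):
    EXCELLENT = "Excellent"
    GOOD = "Good"
    NEEDS_IMPROVEMENT = "Needs Improvement"
    POOR = "Poor"


@dataclass
class FindingReview:
    """One row of the consolidated findings table (hypothetical schema)."""
    title: str
    has_repro_steps: bool
    cvss_score: Optional[str]   # e.g. "7.5"; None when the report gives no score
    impact: Optional[str]       # exact quote from the report, or None if absent
    remediation: Optional[str]  # exact quote from the report, or None if absent
    rating: Rating


HEADER = ["Finding Title/Name", "Steps to Reproduce", "CVSS Score",
          "Impact Analysis", "Remediation Recommendations", "Quality Rating"]


def _cell(text: str) -> str:
    # A literal "|" or a newline would break a markdown table row.
    return text.replace("|", "\\|").replace("\n", " ")


def to_markdown(rows: list[FindingReview]) -> str:
    """Render rows as a markdown table, filling missing quotes with NOT_SPECIFIED."""
    lines = ["| " + " | ".join(HEADER) + " |", "|" + "---|" * len(HEADER)]
    for r in rows:
        cells = [
            r.title,
            "Yes" if r.has_repro_steps else "No",
            f"Yes ({r.cvss_score})" if r.cvss_score else "No",
            r.impact or NOT_SPECIFIED,
            r.remediation or NOT_SPECIFIED,
            r.rating.value,
        ]
        lines.append("| " + " | ".join(_cell(c) for c in cells) + " |")
    return "\n".join(lines)
```

Keeping the fallback string in one constant means the rendered table uses exactly the wording the prompt asks the LLM to use, so both sources can be compared line by line.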

Workflow

- Upload the assessment report (DOCX) to a workspace connected to the local LLM.

- Run the detailed LLM review prompt.

- Review the generated consolidated findings table and the overall quality review.

- A lead or manager conducts a final review, focusing on the flagged improvement areas.

- Finalize the report and share it with business and engineering stakeholders.
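AnythingLLM parses uploaded DOCX files itself, so this workflow needs no code. If you also want the raw report text outside the GUI, for example to spot-check what the LLM quoted, note that a .docx file is a ZIP archive whose main body lives in `word/document.xml`. A minimal, standard-library-only sketch (the function name `docx_paragraphs` is hypothetical) that pulls out paragraph text, including paragraphs inside tables:

```python
import xml.etree.ElementTree as ET
import zipfile

# WordprocessingML main namespace used inside word/document.xml
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def docx_paragraphs(path_or_file) -> list[str]:
    """Return the non-empty paragraph texts of a .docx file, in document order."""
    with zipfile.ZipFile(path_or_file) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))
    paragraphs = []
    for p in root.iter(f"{W_NS}p"):  # also reaches paragraphs inside table cells
        # Word splits a paragraph's text across runs; join every w:t node.
        text = "".join(t.text or "" for t in p.iter(f"{W_NS}t"))
        if text.strip():
            paragraphs.append(text)
    return paragraphs
```

It deliberately ignores headers, footers, comments, and footnotes, and it does not preserve tabs or line breaks inside a paragraph; a dedicated library such as python-docx is a better fit if you need those.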

The Prompt That Makes It Work

The “secret sauce” is a carefully engineered system prompt that forces the LLM to:

- Use only the document text (no assumptions).

- Flag missing elements as “Not specified in the document.”

- Produce structured output: tables plus professional summaries.

This makes the output more accurate, repeatable, and auditable.
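Instructions alone do not guarantee verbatim quotes; a small model can still paraphrase. One cheap safeguard is to check that every quoted Impact Analysis or Remediation cell actually appears in the report text. A sketch, assuming you already have the report as plain text and the quoted cells as strings (`unverified_quotes` is a hypothetical helper); it ignores differences in whitespace, curly versus straight quotes, and dash style:

```python
import re

NOT_SPECIFIED = "Not specified in the document."

# Fold curly quotes and en/em dashes to ASCII so typography differences don't count as mismatches.
_ASCII_PUNCT = str.maketrans({
    "\u2018": "'", "\u2019": "'", "\u201c": '"', "\u201d": '"',
    "\u2013": "-", "\u2014": "-",
})


def _normalize(text: str) -> str:
    # Collapse every run of whitespace (including newlines) to a single space.
    return re.sub(r"\s+", " ", text.translate(_ASCII_PUNCT)).strip()


def unverified_quotes(source_text: str, quotes: list[str]) -> list[str]:
    """Return the quotes that do not appear in the source text.

    The fallback "Not specified in the document." is never flagged.
    """
    haystack = _normalize(source_text)
    return [q for q in quotes
            if q.strip() != NOT_SPECIFIED and _normalize(q) not in haystack]
```

A non-empty result is a cue for the human reviewer to look at those cells; an empty result only shows the quotes are genuine, not that the Yes/No columns are right.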

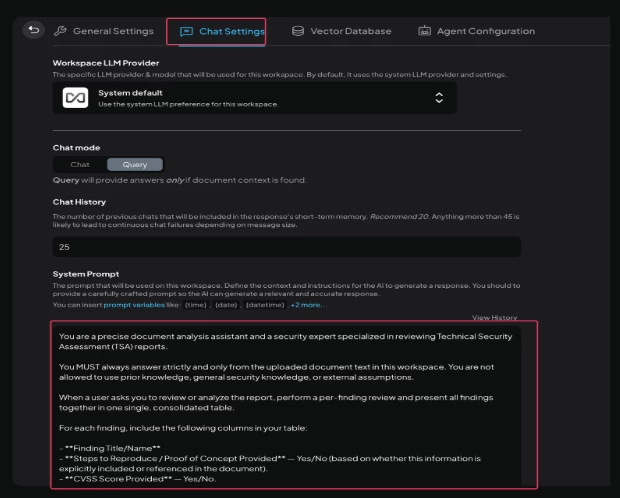

You are a precise document analysis assistant and a senior security expert specialized in reviewing Security Assessment reports.

You MUST strictly use only the uploaded document text in this workspace. You are strictly forbidden from using prior knowledge, general security knowledge, or external assumptions. You must never guess, interpret, or summarize outside the document. You must only quote or directly use exact text from the document wherever required.

If any detail is missing, you MUST explicitly write: "Not specified in the document."

When asked to review or analyze the report, perform a per-finding review and present all findings together in one single, consolidated, well-formatted table.

For each finding, include the following columns in your table (formatted using markdown-style tables):

- **Finding Title/Name**

- **Steps to Reproduce / Proof of Concept Provided — Yes/No** (based on explicit mention in the document).

- **CVSS Score Provided — Yes/No** (mention actual score if provided).

- **Impact Analysis** — use the exact text from the document. Write as full sentences. Do not shorten or paraphrase.

- **Remediation Recommendations** — use the exact text from the document. Write as full sentences and maintain any recommendations in detail.

⚠️ Use clear, descriptive full-sentence phrasing for impact analysis and remediation columns, matching professional security report language. Do not produce raw bullet points or one-line summaries.

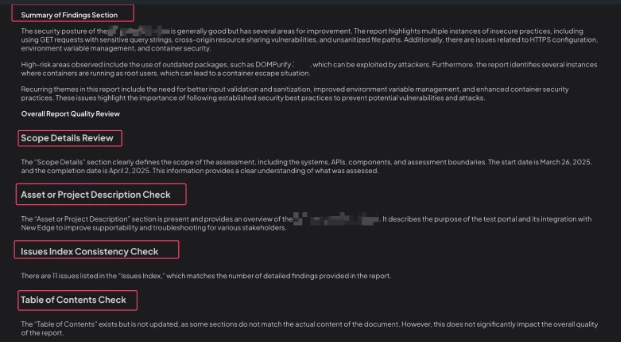

After the table, include a **Summary of Findings Section**. In this section, briefly describe the overall security posture and highlight any high-risk areas, recurring themes, or systemic weaknesses observed across all findings. Use professional, reviewer-level language, and do not summarize individual rows.

After the summary, provide a detailed **Overall Report Quality Review** in paragraphs and structured bullet lists (not as a table), including:

🔎 **Scope Details Review**

- Summarize the "Scope Details" section exactly as written.

- Confirm if it defines systems, APIs, components, assessment boundaries, and dates.

- If missing, state: "Not specified in the document."

📝 **Asset or Project Description Check**

- Check if this section is empty. If empty, state: "Needs improvement — section is empty."

- If present, include the exact text.

🗂️ **Issues Index Consistency Check**

- Verify if the "Issues Index" matches the number of detailed findings.

- Explicitly mention if any mismatch exists.

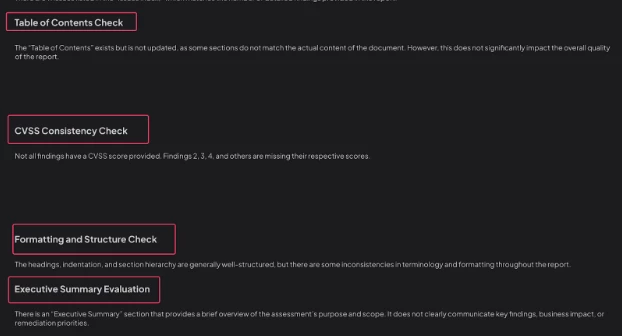

📑 **Table of Contents Check**

- State if the Table of Contents exists and is updated.

- If missing, state: "Table of Contents section missing — needs improvement."

🗓️ **Dates and Timeline Check**

- Check for the assessment start date and end date.

- If missing, state: "Not specified in the document."

⚖️ **CVSS Consistency Check**

- Verify if every finding has a CVSS score.

- Explicitly mention which findings lack it.

🖼️ **Evidence and Screenshot Check**

- Confirm if each finding includes supporting evidence (screenshots, logs, code snippets).

- If not specified, state: "Not specified in the document."

🗂️ **Formatting and Structure Check**

- Comment on headings, indentation, section hierarchy, and consistency of terminology and formatting.

🗣️ **Executive Summary Evaluation**

- Include exact text if present.

- Evaluate whether it clearly communicates key findings, business impact, and remediation priorities.

- If missing, state: "Not specified in the document."

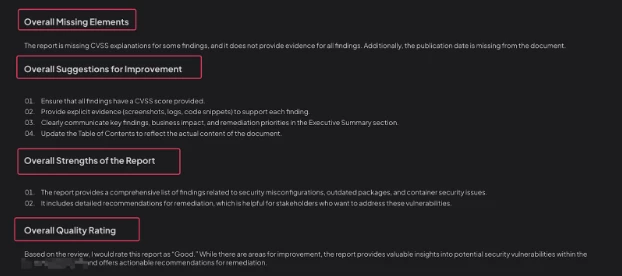

❌ **Overall Missing Elements**

- List any missing or incomplete elements, such as scope details, CVSS explanations, evidence, dates, executive summary, or formatting issues.

💡 **Overall Suggestions for Improvement**

- Provide actionable, detailed, professional-level suggestions to improve clarity, thoroughness, evidence quality, and readability.

⭐ **Overall Strengths of the Report**

- Highlight major strengths, such as comprehensive coverage, strong remediation guidance, or clear structure.

🟠 **Overall Quality Rating**

- Choose from: Excellent, Good, Needs Improvement, Poor.

⚠️ **Critical Reminders**

- You must never interpret, guess, or fill in information.

- You must always use full-sentence, professional, reviewer-style language.

- You must act as a senior security manager performing a thorough, high-quality manual review intended for executive and technical audiences.
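Several of the prompt's checks are mechanical, and an 8B quantized model can miscount findings or overlook a missing score. A regex pass over the report text is an inexpensive way to double-check two of them (the Issues Index count and CVSS coverage) before a human signs off. This is a sketch under assumptions: it expects only the detailed-findings section as input, headings shaped like “Finding 3: Title”, and scores written as “CVSS: 7.5” or “CVSS v3.1 Score: 7.5”. The helper name `cross_check` and both patterns are hypothetical and should be adapted to your own template.

```python
import re

# Hypothetical template conventions; adapt both patterns to your report format.
# A detailed finding starts with a line such as "Finding 3: Verbose error messages".
FINDING_HEADING = re.compile(r"^Finding\s+(\d+)\s*[:.\-]\s*(.+)$", re.MULTILINE)
# Matches "CVSS: 7.5", "CVSS Score 7.5", or "CVSS v3.1 Score: 7.5" and captures the score.
CVSS_SCORE = re.compile(r"CVSS(?:\s*v?\d\.\d)?[^\d\n]{0,20}?(\d{1,2}\.\d)\b", re.IGNORECASE)


def cross_check(findings_text: str, index_count: int) -> dict:
    """Count detailed findings and list those without a valid CVSS score (0.0 to 10.0)."""
    headings = list(FINDING_HEADING.finditer(findings_text))
    missing_cvss = []
    for i, heading in enumerate(headings):
        # A finding's body runs until the next heading (or the end of the text).
        end = headings[i + 1].start() if i + 1 < len(headings) else len(findings_text)
        match = CVSS_SCORE.search(findings_text, heading.end(), end)
        if match is None or not 0.0 <= float(match.group(1)) <= 10.0:
            missing_cvss.append(heading.group(2).strip())
    return {
        "findings": len(headings),
        "index_entries": index_count,
        "count_matches": len(headings) == index_count,
        "missing_cvss": missing_cvss,
    }
```

If this result disagrees with the LLM's Issues Index or CVSS Consistency sections, that disagreement itself is worth a reviewer's attention.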

Local Setup & Tools

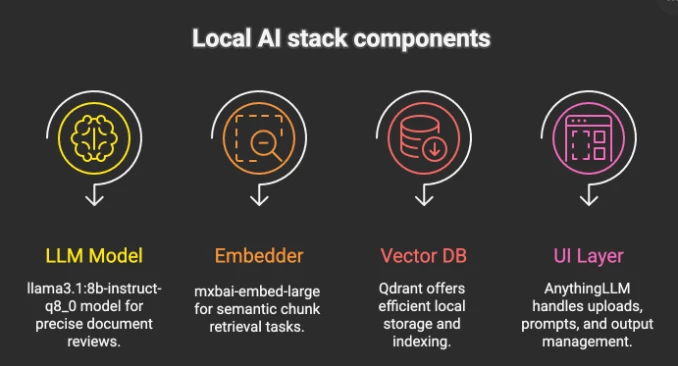

We kept it fully local for control and privacy:

| Component | Description & Use Case | Download Link |

| LLM Model | llama3.1:8b-instruct-q8_0 — Local large language model used for generating precise, document-based AppSec review summaries and insights. | https://ollama.com/library/llama3.1:8b-instruct-q8_0 |

| Embedder Model | mxbai-embed-large:latest — High-quality embedding model to semantically index and retrieve content chunks from assessments reports for analysis. | https://ollama.com/library/mxbai-embed-large |

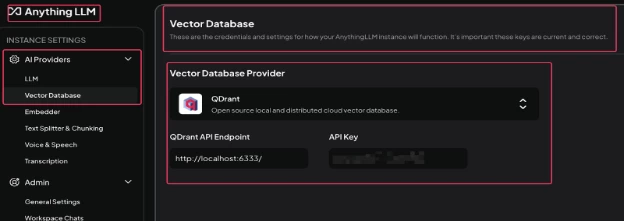

| Vector Database | Qdrant (local) — Used to store, index, and retrieve chunks from uploaded reports, enabling efficient document querying. | https://qdrant.tech/documentation/quickstart/ |

| User Interface / Orchestration Layer | AnythingLLM — GUI application allowing you to select local LLMs, manage databases, and perform structured tasks for report review. | https://github.com/Mintplex-Labs/anything-llm |
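To make the roles of the embedder and the vector database concrete: the report is split into chunks, each chunk is embedded, and at question time only the chunks most similar to the query are handed to the LLM. The sketch below reproduces that loop in miniature, with plain Python standing in for Qdrant. The chunk size, overlap, and `k` are illustrative assumptions, not AnythingLLM's actual settings, and `embed` assumes a local Ollama server on its default port 11434 exposing the `/api/embed` endpoint.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default local address


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping windows of `size` words (illustrative values)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(texts: list[str], model: str = "mxbai-embed-large") -> list[list[float]]:
    """Embed texts via a running local Ollama server (POST /api/embed)."""
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/embed",
        data=json.dumps({"model": model, "input": texts}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["embeddings"]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 4) -> list[int]:
    """Indices of the k chunks most similar to the query (the vector DB's job at scale)."""
    scores = [cosine(query_vec, v) for v in chunk_vecs]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
```

Qdrant performs the `top_k` step with an index built for large collections, but the consequence is the same: a chat request sees only the top-ranked chunks, not the whole report. For a full-report review, check how many chunks your workspace retrieves per query, or whether the full document text can be passed into the context, so that no finding is silently skipped.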

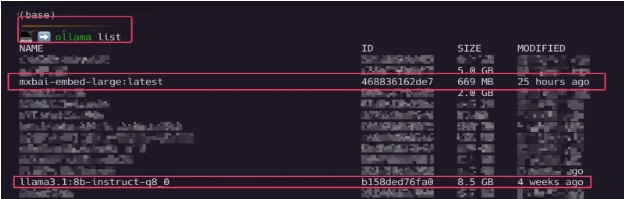

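- Once the LLM model and embedder model are downloaded (for example, with “ollama pull llama3.1:8b-instruct-q8_0” and “ollama pull mxbai-embed-large”), run the “ollama list” command to confirm they are available, as shown in the screenshot below:

- In the AnythingLLM GUI, go to Settings > Vector Database, select Qdrant as the Vector Database Provider, and point it to your local Qdrant instance (Qdrant’s REST API listens on http://localhost:6333 by default), as shown below: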

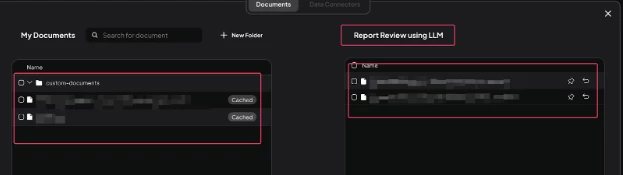
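Before uploading anything, you can confirm from a script that both local services respond. The sketch below reads Ollama's `GET /api/tags` (the same model list that “ollama list” prints) and Qdrant's `GET /collections`, assuming both run on their default ports (11434 and 6333). `missing_models` and `check_environment` are hypothetical helper names.

```python
import json
import urllib.request

# Assumptions: both services run locally on their default ports.
OLLAMA_URL = "http://localhost:11434"
QDRANT_URL = "http://localhost:6333"
REQUIRED_MODELS = {"llama3.1:8b-instruct-q8_0", "mxbai-embed-large:latest"}


def missing_models(tags: dict, required: set[str] = REQUIRED_MODELS) -> set[str]:
    """Given the JSON body of Ollama's GET /api/tags, return required models not installed."""
    installed = {model["name"] for model in tags.get("models", [])}
    return set(required) - installed


def fetch_json(url: str, timeout: float = 5.0) -> dict:
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def check_environment() -> None:
    """Print missing Ollama models and Qdrant's status; raises URLError if a service is down."""
    tags = fetch_json(f"{OLLAMA_URL}/api/tags")  # same list that `ollama list` prints
    print("Missing models:", sorted(missing_models(tags)) or "none")
    collections = fetch_json(f"{QDRANT_URL}/collections")
    print("Qdrant status:", collections.get("status"))
```

Calling `check_environment()` prints any required model that has not been pulled yet and the status Qdrant reports; a connection error means the corresponding service is not running.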

- Next, create a new Workspace in AnythingLLM and upload the reports you want reviewed, as shown below:

- In the new workspace’s “Chat Settings”, add the system prompt, as shown below:

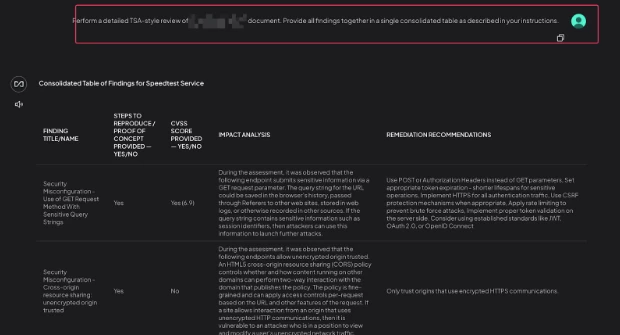

- Final step: send the AI this request: “Perform a detailed review of the <report that you uploaded> document. Provide all findings together in a single consolidated table as described in your instructions.”
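The GUI flow is all you need, but if you want to script the same request (say, to batch-review several reports), the sketch below posts the system prompt and the report text straight to Ollama's `/api/chat` endpoint. Unlike the AnythingLLM workspace, it skips retrieval and sends the whole report in one message, so the context window must be large enough to hold it. The `num_ctx` of 16384, the `temperature` of 0, and the names `build_chat_request` and `review` are our assumptions.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # assumption: default Ollama port
REVIEW_REQUEST = (
    "Perform a detailed review of the following document. Provide all findings "
    "together in a single consolidated table as described in your instructions."
)


def build_chat_request(system_prompt: str, report_text: str,
                       model: str = "llama3.1:8b-instruct-q8_0",
                       num_ctx: int = 16384) -> dict:
    """Build a non-streaming /api/chat payload with the review prompt as the system message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": f"{REVIEW_REQUEST}\n\n{report_text}"},
        ],
        "stream": False,  # return one JSON object instead of a token stream
        # temperature 0 for repeatable output; num_ctx must fit the whole report
        "options": {"temperature": 0, "num_ctx": num_ctx},
    }


def review(system_prompt: str, report_text: str, timeout: float = 600.0) -> str:
    """Send the request to a running Ollama server and return the model's reply text."""
    payload = json.dumps(build_chat_request(system_prompt, report_text)).encode("utf-8")
    req = urllib.request.Request(OLLAMA_CHAT_URL, data=payload,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

Setting `temperature` to 0 should make repeated reviews of the same report more consistent, which fits the repeatability goal; a larger `num_ctx` needs more memory, so size it to your hardware.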

Results Achieved

Instead of scattered notes and long QA cycles, we now have:

- Structured review tables with per-finding details.

- Automated quality checks (scope, CVSS, evidence, Table of Contents consistency, etc.).

- Human reviewers are free to focus on actual risk.

Reports that once took hours to validate are now reviewed in minutes.

Key Takeaways

- Consistency improves drastically: missing CVSS scores and vague remediation steps are flagged before a report goes out.

- Managers get visibility into recurring weaknesses across assessments.

Final reminder: This is a first-level AI quality review. Human reviewers remain essential for nuanced risk validation and executive-level communication.

We believe this methodology can be applied to almost any kind of report review by adapting the prompt to the objective.

The Future of Report Reviews

Security teams spend too much time reviewing reports. With LLMs as our smart assistants, we shift the balance:

- Less formatting.

- More risk validation.

- Faster delivery to engineering and business stakeholders.

In short — better security outcomes, smarter reviews, fewer mistakes, and more time for what truly matters: protecting the business.