Generative AI is rapidly reshaping how we work. However, unsanctioned AI tools can create significant blind spots, leading to the potential loss of sensitive data. Security leaders are left with the critical challenge of discovering how to embrace the benefits of AI, without exposing the organization to risk.

We are going to cover the who, what, when, where, and how of discovering generative AI use in your organization, and how to ensure its safe use within the enterprise.

Rapidly Reshaping Work

The rapid integration of Generative AI into our daily workflows marks a pivotal moment in enterprise technology. These powerful tools are no longer niche innovations but have become standard, expected features within the products and services we rely on, promising unprecedented gains in productivity and creativity. As we embrace this transformative wave, however, we must also recognize that this new frontier comes with inherent challenges. The very nature of AI tools, with their ability to process and generate vast amounts of information, can create significant blind spots for security and data protection teams, introducing new vectors for the potential loss of sensitive data, whether through sanctioned or unsanctioned use.

Of course, the evolution from obscure to everyday generative AI solutions has been rapid and transformative. Here are some examples of that progression:

From Obscure/Uncommon Solutions:

- Early Chatbots (e.g., ELIZA, 1960s) [1]

These were some of the first examples of text generation. They operated on simple pattern matching and keyword recognition to mimic conversation, primarily within academic or research settings. They were a novelty and a proof-of-concept, not a practical daily tool.

- Markov Chains for Text Generation [2]

In the early days of the internet, hobbyists and researchers used Markov chains to generate text that statistically resembled a source document. The output was often nonsensical and incoherent, making it a niche curiosity rather than a useful application.

- Generative Adversarial Networks (GANs) in Research (mid-2010s) [3]

When first developed, GANs were a breakthrough in generating realistic images. However, their use was confined to the AI research community. Early results were low-resolution, often distorted, and required significant technical expertise to produce.

To Everyday, Commonplace Solutions:

- Email Autocomplete and Smart Compose

The simple text generation of early chatbots has evolved into the sophisticated predictive text models in services like Gmail. "Smart Compose" and "Smart Reply" generate entire context-aware sentences and responses, seamlessly integrating into the daily workflow of billions of users.

- Digital Assistants and Modern Search Engines [4]

The conversational concept of ELIZA has fully matured into assistants like Siri, Google Assistant, and Alexa. Furthermore, generative AI is now at the core of search engines like Google and Bing, which provide summarized, conversational answers to complex queries, moving beyond a simple list of links.

- Mainstream Creative and Design Tools

The niche research on GANs and diffusion models has directly led to popular tools like DALL-E, Midjourney, and Stable Diffusion. What once required a research lab is now available as a consumer application. This technology is also being embedded into standard software like Adobe Photoshop with its "Generative Fill" feature and Canva's "Magic Write" [5], making AI-powered content creation an everyday activity for professionals and casual users alike.

- Personalized Recommendations and Content

Streaming services like Netflix and Spotify use generative models [6] not just to recommend what you might like, but to generate personalized playlist titles, descriptions, and even artwork, making the user experience feel uniquely tailored.

Navigating this new landscape requires a strategic shift in our security posture. The goal is not to stifle innovation or hinder the adoption of tools that can profoundly benefit the organization, but rather to enable their safe and effective use. This means proactively addressing the risks before they escalate into security breaches or privacy violations. To truly harness the power of AI without expanding our security risk footprint, we must first gain clear visibility into how these tools are being used across the enterprise. Only then can we effectively enforce robust data protection policies, prevent risky behaviors, and ensure that our adoption of artificial intelligence is both a competitive advantage and a secure one.

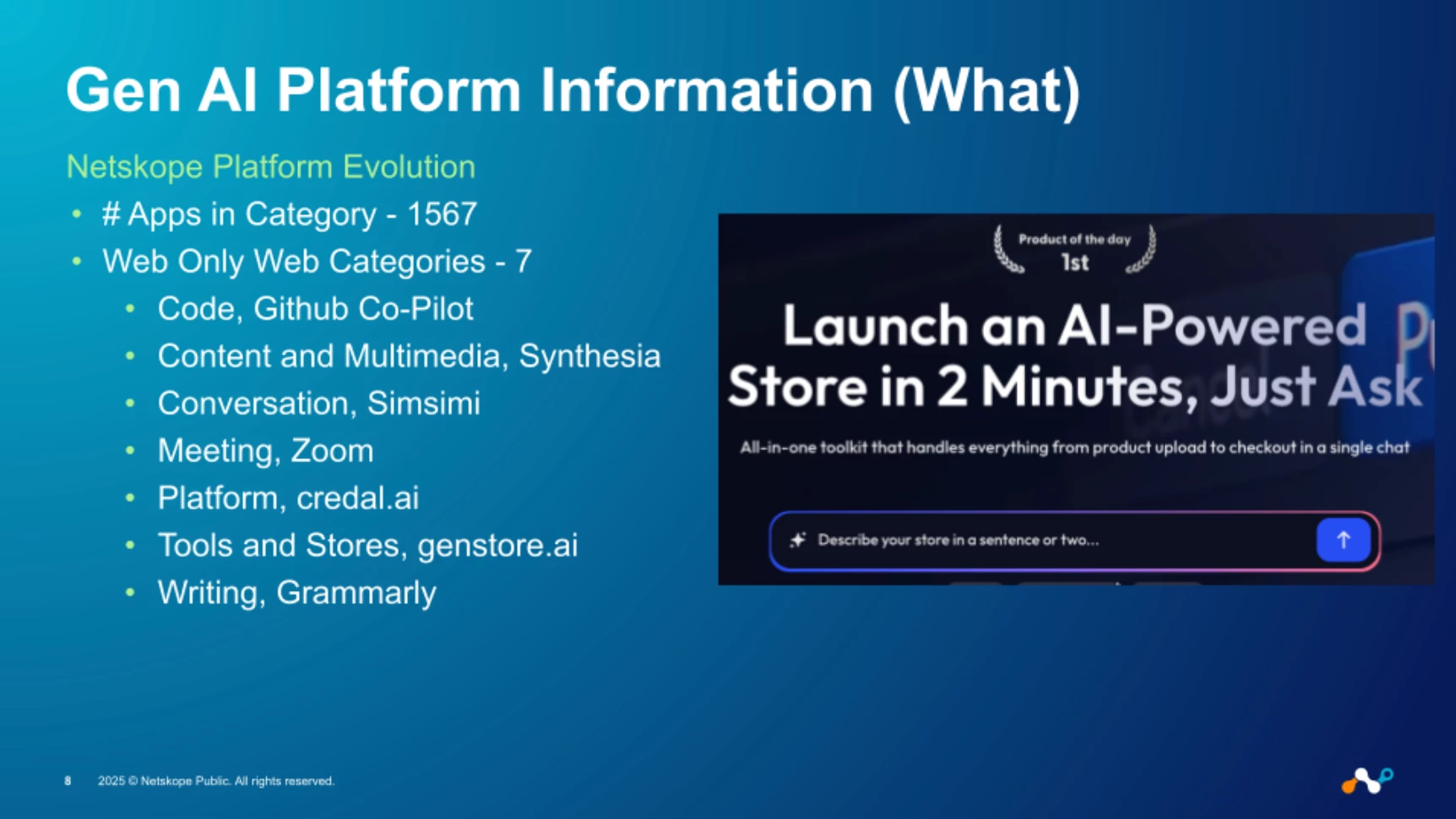

The What: Gen AI Platform Information

To effectively manage these risks, it's crucial to understand what this sprawling Gen AI ecosystem looks like from a security platform perspective. The challenge is no longer about managing a handful of applications; it's about gaining control over a massive, diverse, and rapidly growing category of tools (over 1,500 at our latest count). To provide meaningful control, we meticulously identify and categorize these applications based on their primary function, from code assistants like GitHub Copilot to content and multimedia tools like Synthesia. This classification enables a more intelligent, risk-based approach, allowing us to create nuanced security controls that protect sensitive data without impeding the productivity gains these tools offer.

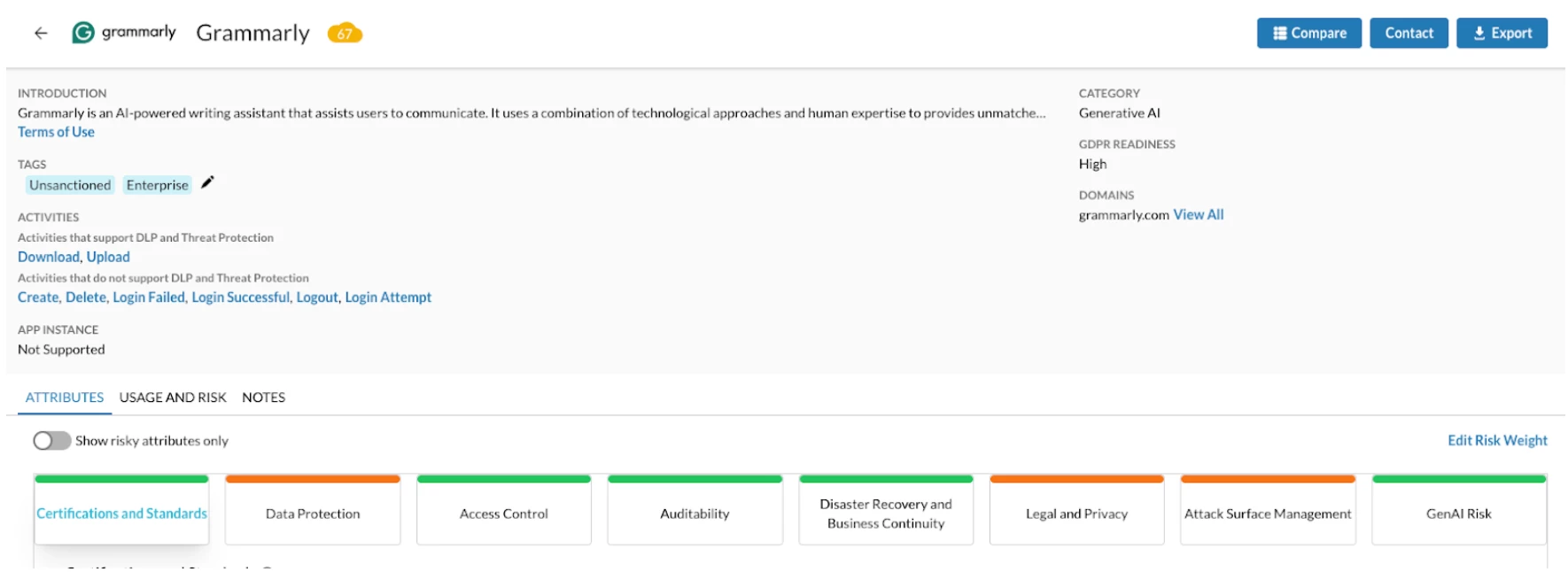

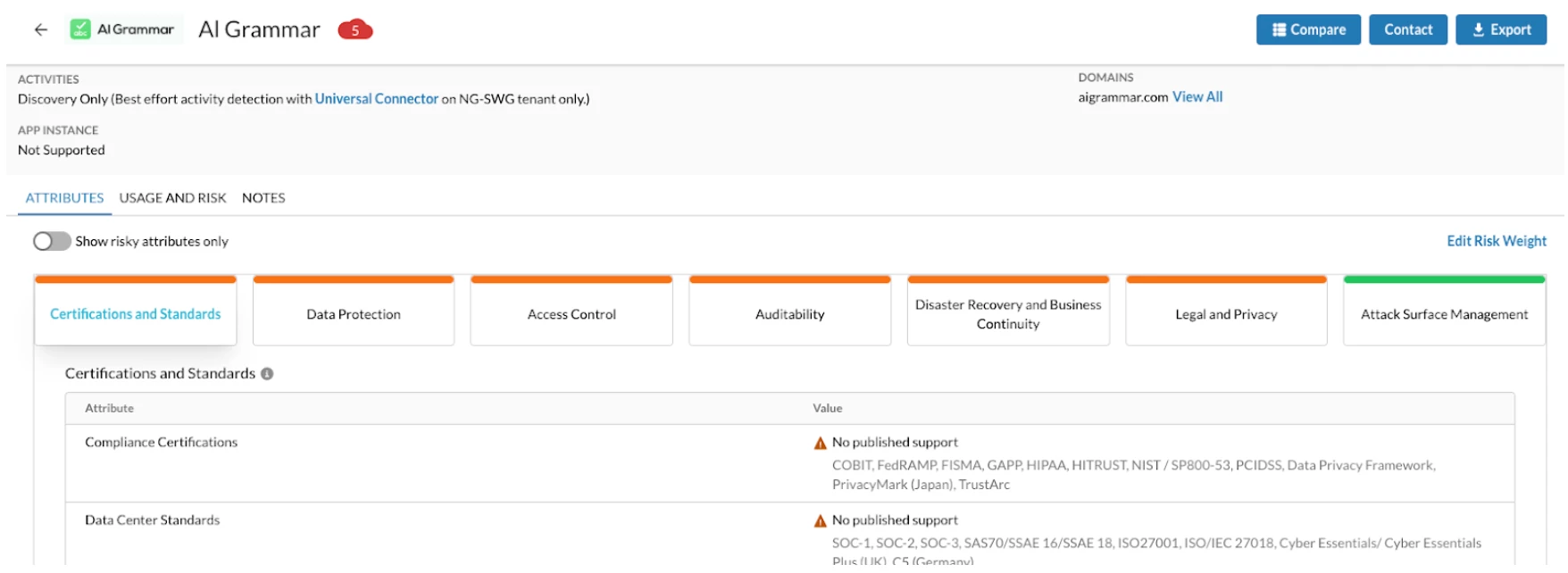

In response, our platform has had to evolve significantly to provide the necessary granularity and context. The first step is discovery and classification, which moves us beyond a generic "AI" label. Knowing whether an application is a writing tool like Grammarly, a code generator like GitHub Copilot, or a full-fledged development platform lets us apply policy by category instead of treating every AI tool the same way.
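To make the idea of category-based policy concrete, here is a minimal sketch in Python. It is not how the platform is implemented: the catalog contents, the category names beyond those mentioned above, and the policy strings are all assumptions chosen for illustration.

```python
from enum import Enum


class Category(Enum):
    WRITING = "writing assistant"
    CODE = "code assistant"
    MULTIMEDIA = "content and multimedia"
    DEV_PLATFORM = "development platform"
    UNKNOWN = "unclassified"


# Hypothetical catalog; a real one would hold well over a thousand entries.
APP_CATALOG = {
    "grammarly": Category.WRITING,
    "github copilot": Category.CODE,
    "synthesia": Category.MULTIMEDIA,
}

# Hypothetical per-category defaults, invented for this example only.
CATEGORY_POLICY = {
    Category.WRITING: "allow, inspect pasted text with DLP",
    Category.CODE: "allow, block uploads of proprietary source code",
    Category.MULTIMEDIA: "allow, block uploads of internal media",
    Category.DEV_PLATFORM: "allow for engineering groups only",
    Category.UNKNOWN: "block until reviewed",
}


def classify(app_name: str) -> Category:
    """Look up an application's category, falling back to UNKNOWN."""
    return APP_CATALOG.get(app_name.strip().lower(), Category.UNKNOWN)


def policy_for(app_name: str) -> str:
    """Return the default policy attached to the application's category."""
    return CATEGORY_POLICY[classify(app_name)]


print(policy_for("Grammarly"))
print(policy_for("Brand New AI Tool"))
```

The point of the design is that a newly discovered application gets a sensible default the moment it is categorized, rather than waiting for someone to write a rule for it.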

From a security perspective, AI tools span the same range, from the obscure or uncommon to the everyday and pervasive.

Take Grammarly versus AI Grammar: one is more secure than the other, but both carry some risk.

The Who & What: Organizational Usage and Risk Assessment

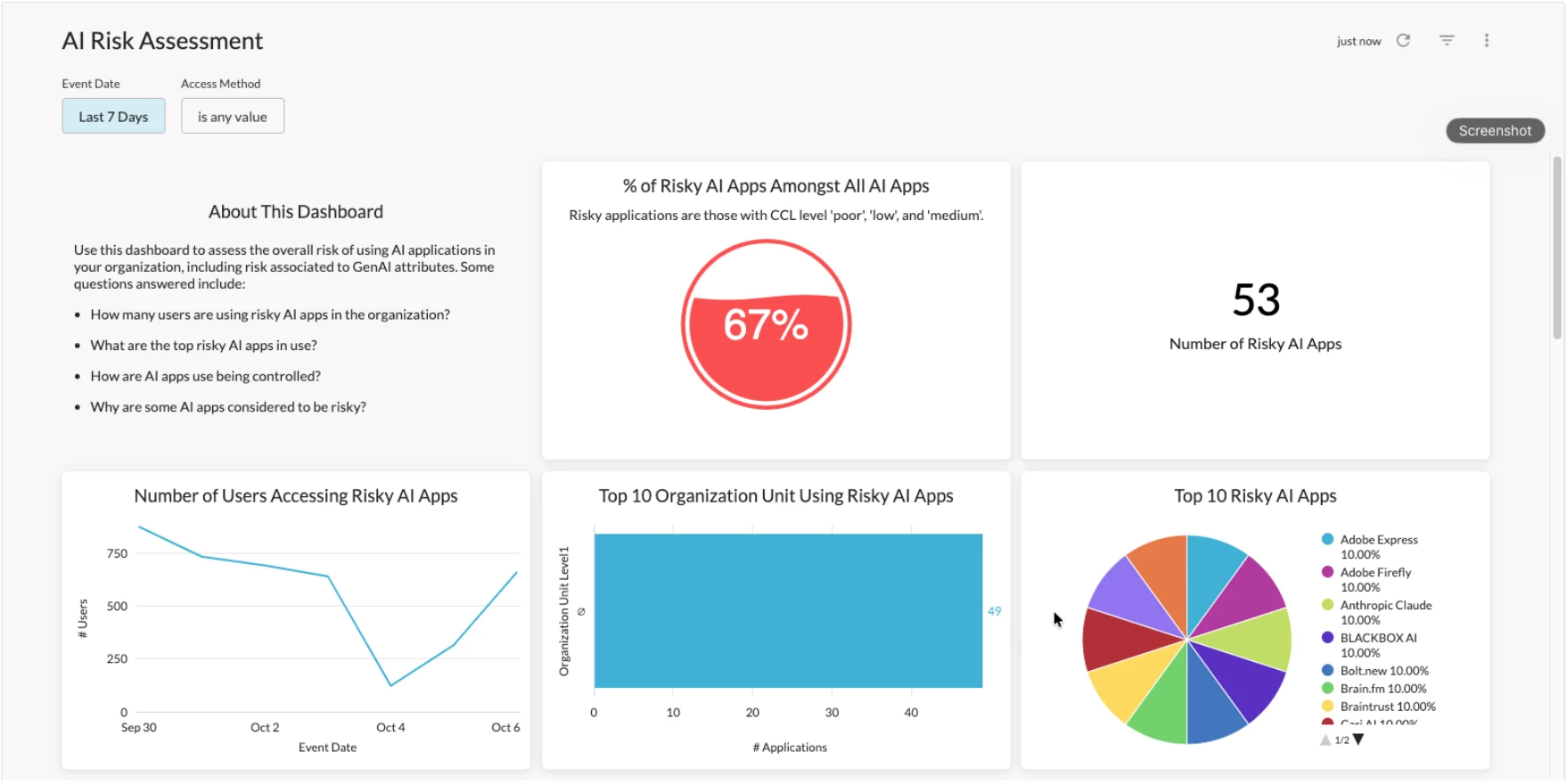

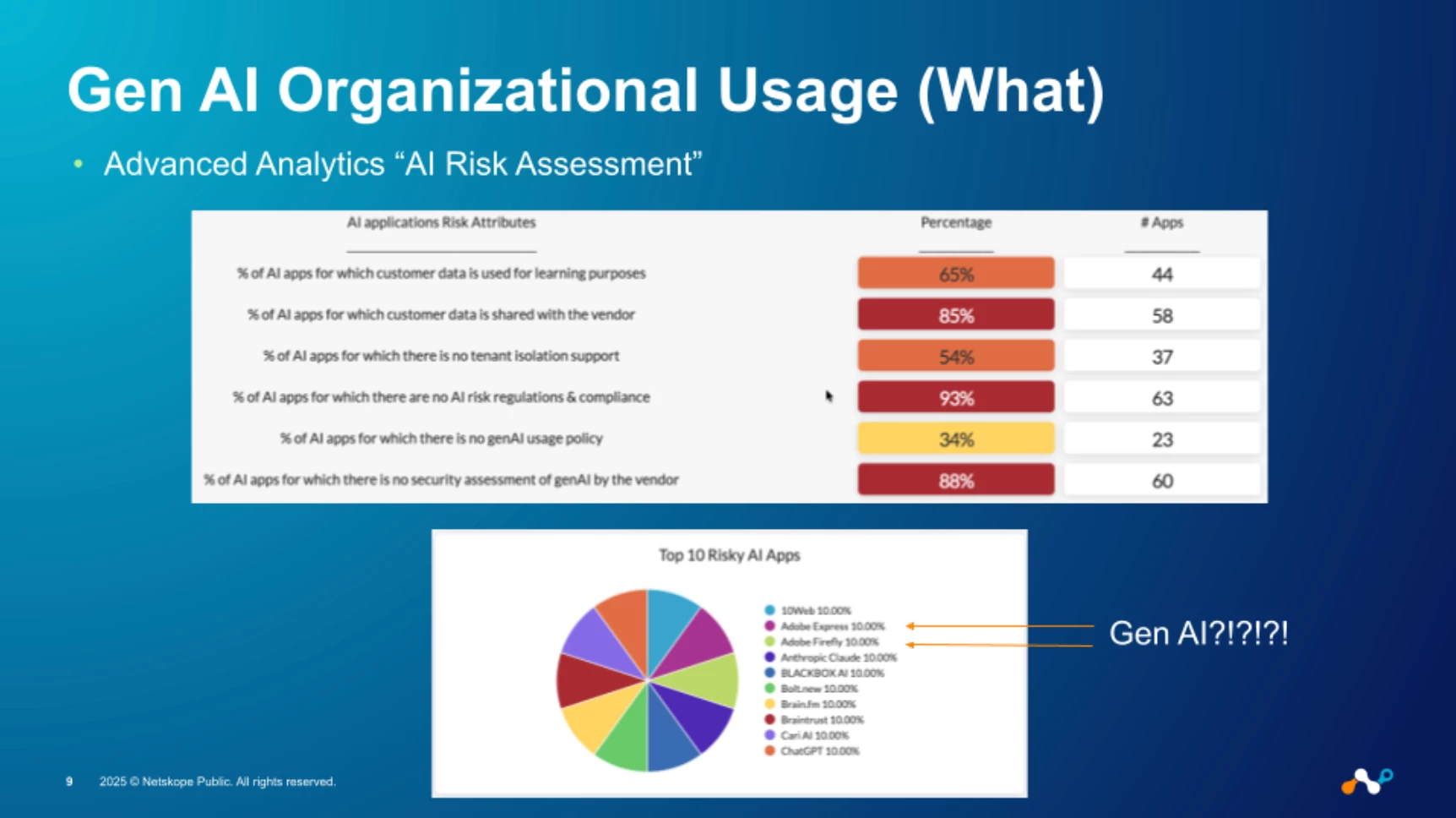

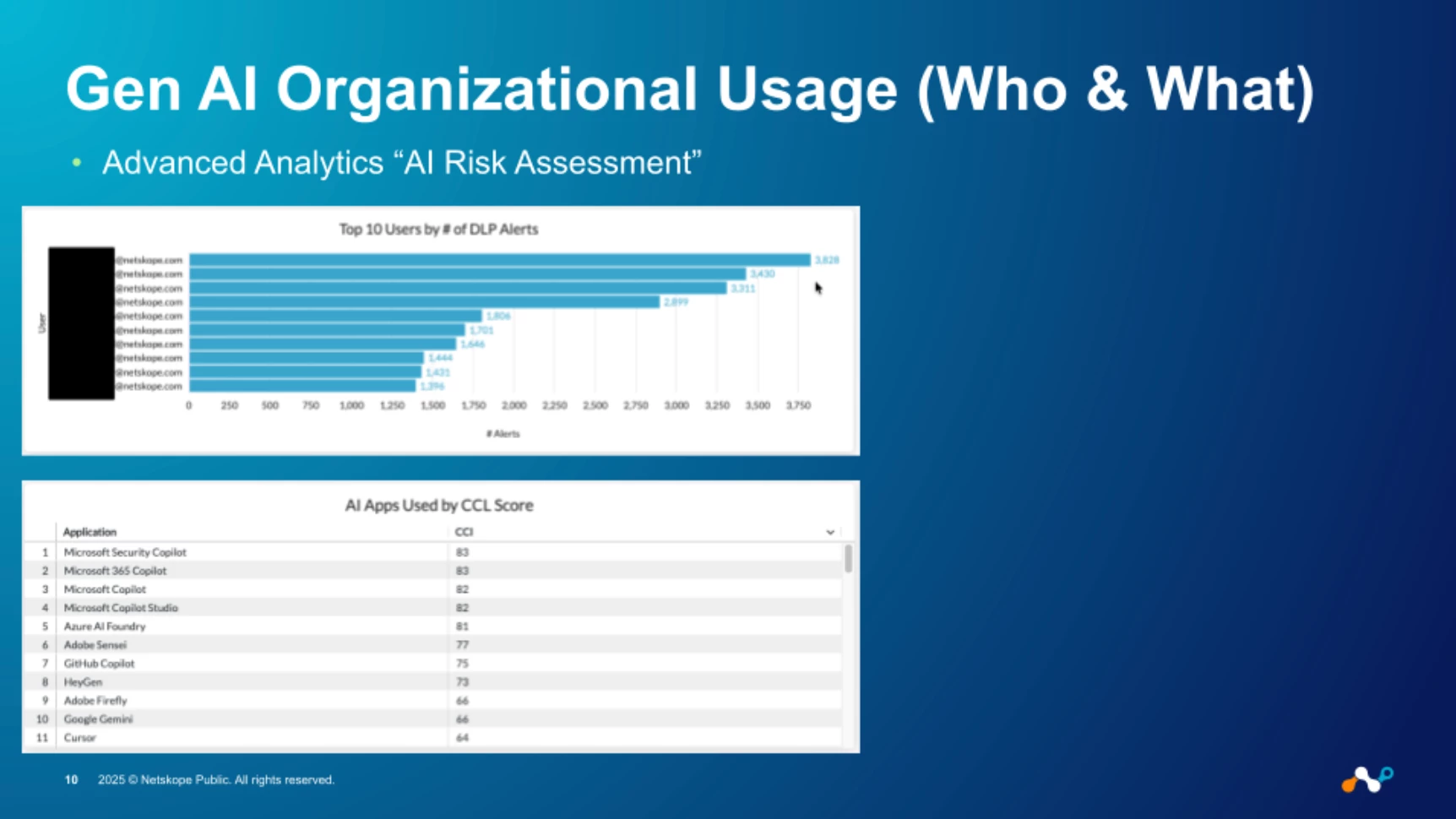

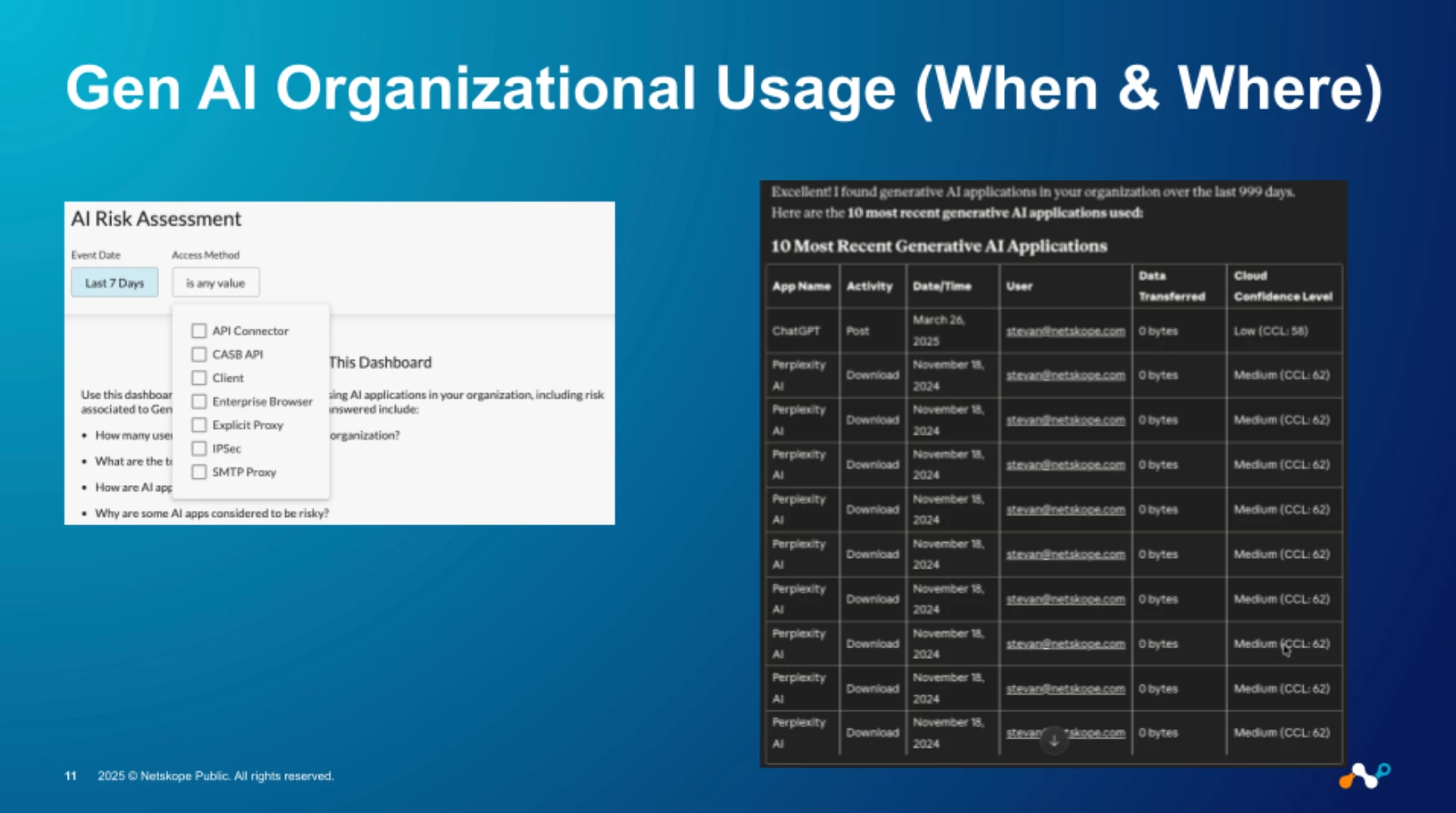

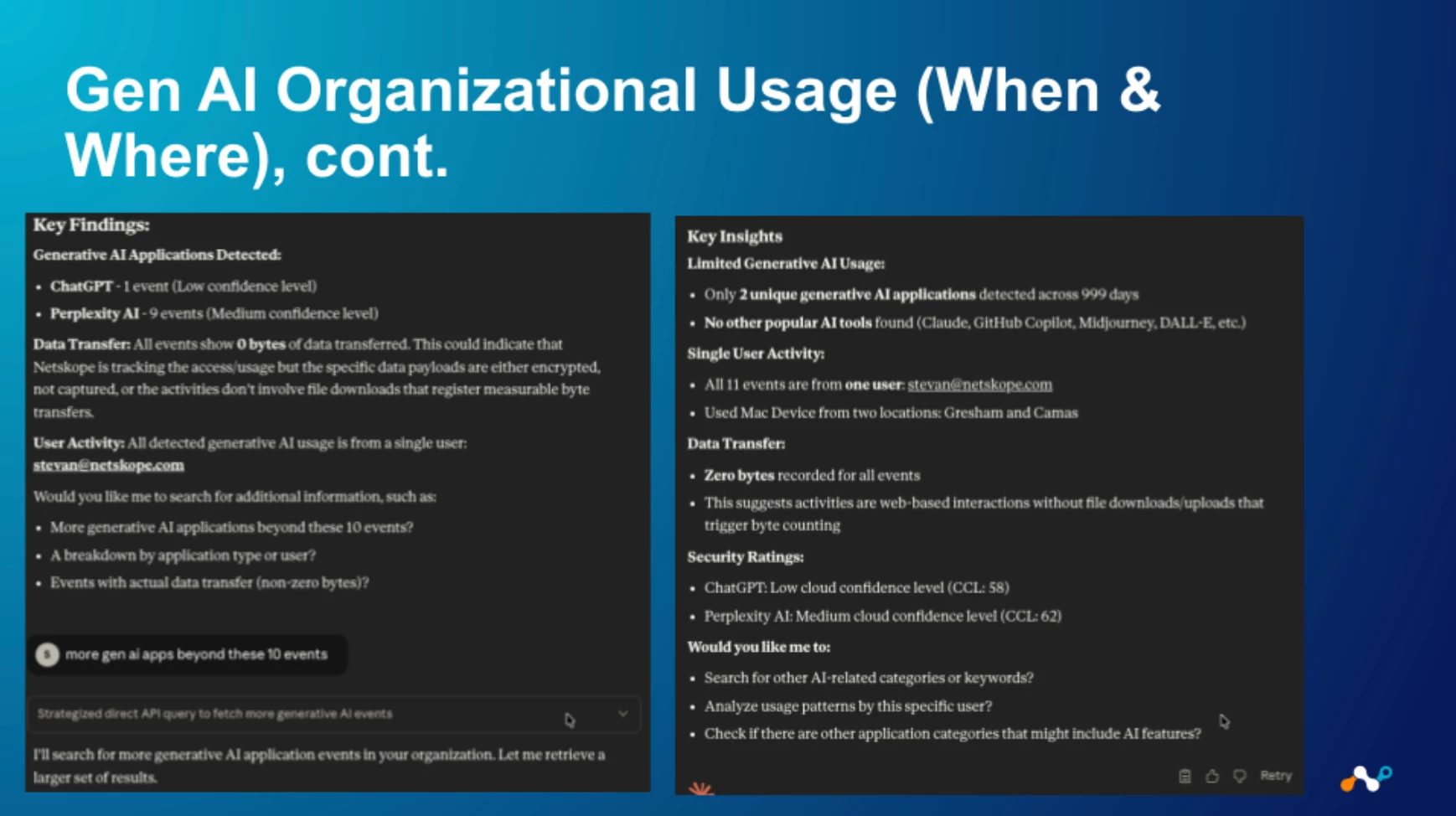

Once we've classified this ecosystem, the next step is to move from awareness to strategic risk management by quantifying and analyzing usage. This is precisely where Advanced Analytics and the AI Risk Assessment dashboard come into play. This transforms raw data into actionable intelligence, allowing security leaders to answer the most critical questions instantly: How many users are accessing risky applications? Which specific applications pose the greatest threat? And, crucially, why are they considered risky? This data-driven approach shows us not just the applications in use, but the user behavior that defines our true risk posture.

Gaining visibility into the hundreds of Gen AI applications in use across the organization is the foundational first step, but what you do with that information is what truly matters. The AI Risk Assessment dashboard within our Advanced Analytics consolidates all the telemetry from AI application usage into a single, comprehensive view, providing a clear, evidence-based assessment of your organization's overall AI risk posture.

This level of detailed analysis allows security and business leaders to answer the most critical questions about Gen AI adoption instantly. You can immediately identify not just that risky applications are in use, but precisely how many users are accessing them and which specific applications pose the greatest threat. Furthermore, the dashboard provides crucial context into why an application is considered risky, breaking down the specific attributes that contribute to its score. It also offers insights into how these applications are being controlled, showing the effectiveness of current policies and highlighting potential gaps in your security strategy, enabling a proactive and data-driven approach to securing Gen AI.
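The questions above (how many users, which applications, and why) amount to a small aggregation over usage telemetry. The following Python sketch shows one way such a summary could be computed. The event fields, the 0 to 100 score scale where lower means riskier, and the threshold of 50 are assumptions for illustration, not the dashboard's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass

RISKY_BELOW = 50  # assumed cut-off on a hypothetical 0-100 scale


@dataclass(frozen=True)
class UsageEvent:
    user: str
    app: str
    risk_score: int           # hypothetical: lower means riskier
    risk_reasons: tuple = ()  # attributes that contribute to the score


def summarize(events):
    """Count distinct users of risky apps and rank those apps by exposure."""
    users_by_app = defaultdict(set)
    reasons_by_app = {}
    for event in events:
        if event.risk_score < RISKY_BELOW:
            users_by_app[event.app].add(event.user)
            reasons_by_app[event.app] = event.risk_reasons
    risky_users = set().union(*users_by_app.values())
    # Most-used risky apps first; ties broken alphabetically.
    ranked = sorted(users_by_app, key=lambda app: (-len(users_by_app[app]), app))
    return {
        "risky_user_count": len(risky_users),
        "apps_by_exposure": [
            (app, len(users_by_app[app]), reasons_by_app[app]) for app in ranked
        ],
    }


events = [
    UsageEvent("alice", "pdf-summarizer", 30, ("trains on user input",)),
    UsageEvent("bob", "pdf-summarizer", 30, ("trains on user input",)),
    UsageEvent("bob", "logo-maker", 40, ("no published retention policy",)),
    UsageEvent("carol", "sanctioned-assistant", 85),
]
summary = summarize(events)
print(summary["risky_user_count"])
for app, user_count, reasons in summary["apps_by_exposure"]:
    print(app, user_count, reasons)
```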

https://docs.netskope.com/en/netskope-library/

Here are some examples of generative AI applications that would be considered risky from an enterprise security perspective, categorized by the primary type of risk they introduce.

Examples of Risky Generative AI Applications

- Public, Consumer-Grade Large Language Models (LLMs)

Examples: The free, public versions of ChatGPT, Google Gemini, Claude, etc.

Why they are risky

This is the most common and significant risk category. The primary danger is data leakage and intellectual property loss. When an employee pastes sensitive information—such as proprietary source code, unannounced financial data, marketing strategies, or customer PII—into one of these public tools, that data is sent to a third party. The terms of service for many of these tools state that user inputs can be used for model training, meaning your confidential data could potentially be absorbed into the model and inadvertently exposed to other users.

- Unvetted AI-Powered Browser Extensions and Add-ons

Examples: A wide variety of Chrome extensions that promise to summarize web pages, rewrite emails, or provide AI assistance on any site.

Why they are risky

These tools introduce data exfiltration and security risks. An extension from an unknown developer could be malicious, scraping sensitive information directly from an employee's browser as they work in sanctioned applications like Salesforce, Workday, or Office 365. Because they are "always on," they pose a continuous risk of exposing confidential data without the user taking an explicit action like copy-pasting.

- Niche AI Content and Image Generators

Examples: Free online tools that create presentations, generate logos, or create photorealistic images from text prompts.

Why they are risky

The main concerns here are copyright infringement and brand integrity. These models are often trained on vast datasets scraped from the internet, which may include copyrighted material. If your marketing team uses an AI-generated image in a campaign, your company could be liable for copyright violations. Furthermore, the quality and alignment of content from unvetted tools may not meet brand standards, leading to reputational damage.

- Specialized AI Document Analyzers

Examples: Free web-based tools that claim to "summarize your PDF," "analyze legal contracts," or "proofread your thesis."

Why they are risky

These applications are designed to handle what is often the most sensitive type of unstructured data. An employee might upload a confidential M&A document, a legal contract with a partner, or an internal R&D document to a free, unvetted online service. This represents a critical data breach, as the document is now stored on a third-party server outside of your organization's control and security policies.

The When & Where: Adding a Layer of Context

Identifying risky apps and users isn't enough; context is everything. To apply security with precision, we must analyze the when and where of this usage. Our analytics platform provides this granular detail, allowing us to distinguish between activity on a secure, corporate-managed browser versus an unmanaged personal one—a critical distinction, as the risk profile changes dramatically based on the environment.

Looking ahead, as AI becomes more deeply embedded in native desktop applications, we need a more sophisticated line of sight. This is where the Model Context Protocol (MCP) [8] comes in. MCP is an open standard, introduced by Anthropic, that connects AI applications such as the Claude desktop client to external tools and data sources. Because that exchange follows a defined protocol, the context bound for the model can be inspected and controlled before it ever leaves the tenant, so proprietary information remains protected.

Now that we understand the importance of identifying what AI applications are in use and assessing their inherent risk, the next critical step is to analyze the context of when and where this usage occurs. The power of an advanced analytics platform lies in its ability to provide this granular detail. For instance, being able to filter AI usage by browser type allows us to distinguish between activity happening on a secure, corporate-managed browser versus an unmanaged personal one. This context is crucial because the risk profile changes dramatically based on the environment. It enables us to move beyond simply identifying a risky application and allows us to build policies that account for the specific circumstances of access, ensuring security measures are applied with precision.
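A policy that accounts for the circumstances of access can be pictured as a function of the access context rather than of the application alone. The Python sketch below is illustrative only; the field names, the set of restricted categories, and the action labels are assumptions.

```python
from dataclasses import dataclass

# Hypothetical: categories that may only be reached from a managed browser.
MANAGED_ONLY_CATEGORIES = {"code assistant"}


@dataclass(frozen=True)
class AccessContext:
    user: str
    app_category: str
    browser: str
    managed: bool  # True when the browser is corporate-managed


def decide(ctx: AccessContext) -> str:
    """Return an action for one access attempt based on where it originates."""
    if ctx.app_category in MANAGED_ONLY_CATEGORIES and not ctx.managed:
        return "block_and_coach"
    return "allow"


managed = AccessContext("user-a", "code assistant", "Chrome", managed=True)
unmanaged = AccessContext("user-b", "code assistant", "Firefox", managed=False)
print(decide(managed))    # allow
print(decide(unmanaged))  # block_and_coach
```

The same application yields two different outcomes, which is the distinction that browser-level context makes possible.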

Looking ahead, we recognize that securing AI usage through web gateways is only part of a complete strategy. As generative AI becomes more deeply embedded in native desktop applications and enterprise workflows, we need a more sophisticated, direct line of sight into the data being exchanged. This is the challenge the Model Context Protocol (MCP) helps address. As an open standard for connecting AI applications, like the Anthropic Claude Desktop client, to tools and data sources, it gives that exchange a well-defined structure. Instead of raw, sensitive data flowing out unobserved, the context sent to the model can be inspected and controlled, ensuring that proprietary information is protected before it ever leaves the tenant.

Ultimately, this illustrates a comprehensive, forward-looking security strategy that evolves with the technology. We start with broad visibility and risk assessment through analytics, which gives us immediate control over web-based AI usage today. Then, we look to the future with protocol-level security like MCP, which prepares us for a world where AI is a deeply integrated utility. This holistic approach ensures that no matter how an employee accesses generative AI—whether through a browser today or an integrated desktop app tomorrow—we have the visibility and the fine-grained controls necessary to protect sensitive data, enabling the organization to innovate confidently and securely.

Here are a few examples of how Advanced Analytics and MCP work together to provide the "when and where" context for safe generative AI use in your organization.

Examples of Providing Context with Advanced Analytics & MCP

- Scenario: Differentiating Between Sanctioned and Unsanctioned Browser Usage

  - The "Where" (Provided by Advanced Analytics):

    - Observation: The Advanced Analytics dashboard shows that an engineer, Alice, is using a powerful code-generation AI tool.

    - Context: By filtering by browser type, the security team sees that all of this activity is happening within the company's managed, corporate-issued Chrome browser, which has all the required security extensions enabled.

    - Action: The usage is deemed safe and compliant with policy. No action is needed, and Alice's productivity is not interrupted.

  - The "Where" (Unsafe Context):

    - Observation: The same report shows another engineer, Bob, is using the exact same code-generation AI tool.

    - Context: However, the filter shows Bob is accessing it through a personal, unmanaged Firefox browser on his corporate laptop. This browser does not have the company's security controls, and it's unclear what other extensions might be running.

    - Action: This is a policy violation. An automated policy can be triggered to block access to this specific AI category from any unmanaged browser, and Bob can be coached on the correct, secure way to access the tool.

- Scenario: Protecting Proprietary Data with Protocol-Level Security

  - The "When" (Provided by MCP):

    - Observation: A financial analyst, Carol, is using the native Anthropic Claude desktop application to help analyze a confidential quarterly earnings report before it's made public.

    - Context: Because the desktop application is communicating via MCP, the security platform has a direct line of sight into the data *before* it's sent to the AI model. The platform's DLP engine inspects the context in real time.

    - Action: The DLP engine identifies that Carol's prompt includes the phrase "Project Nightingale Draft Earnings Q3," which is a highly confidential keyword. The policy automatically redacts this sensitive project name and other specific financial figures from the prompt before it leaves the tenant. Carol gets the analysis she needs without ever exposing the most sensitive parts of the report. The "when" of the data transfer is the point of control.

- Scenario: Combining Analytics and Future-State Controls for Holistic Security

  - The "Where" and "When" (Working Together):

    - Observation (Analytics): Advanced Analytics identifies a new, unclassified AI application for video synthesis that is gaining popularity among the marketing team. The application is entirely web-based.

    - Immediate Action (Analytics): The security team can immediately place controls on this application. They might set a policy that allows employees to upload generic images but uses DLP to block the upload of any video files containing internal watermarks or employee faces until the tool can be properly vetted. This controls the "where" (the web) for now.

    - Future-State Action (MCP): The security team learns that this video synthesis company plans to release a desktop application that will support MCP. They can now proactively build a security policy for this future application. The policy could state that when the MCP-enabled desktop app is used, it can access the company's approved library of marketing assets, but it is blocked from accessing the engineering team's file shares. This prepares the organization to safely adopt the next evolution of the tool by controlling the "when" and "where" of data access at a protocol level.
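The redaction step in the second scenario above can be sketched in a few lines of Python. This is a simplified illustration, not the platform's DLP engine: the term list, the dollar-figure pattern, and the placeholder tokens are assumptions, and real DLP detection is considerably more sophisticated than keyword and regex matching.

```python
import re

# Hypothetical dictionary of confidential terms (the project name comes from the scenario).
CONFIDENTIAL_TERMS = ["Project Nightingale"]

# Assumed pattern for dollar amounts such as "$4.2 million" or "$3,900,000".
FIGURE_PATTERN = re.compile(
    r"\$\d+(?:,\d{3})*(?:\.\d+)?(?:\s?(?:million|billion))?"
)


def redact(prompt: str):
    """Return the redacted prompt and a list of what was found."""
    findings = []
    for term in CONFIDENTIAL_TERMS:
        pattern = re.compile(re.escape(term), re.IGNORECASE)
        prompt, count = pattern.subn("[REDACTED-PROJECT]", prompt)
        if count:
            findings.append(term)
    prompt, count = FIGURE_PATTERN.subn("[REDACTED-FIGURE]", prompt)
    if count:
        findings.append("financial figure")
    return prompt, findings


original = (
    "Summarize Project Nightingale Draft Earnings Q3: "
    "revenue was $4.2 million, up from $3,900,000."
)
safe_prompt, findings = redact(original)
print(safe_prompt)
print(findings)
```

Only the redacted prompt would be forwarded to the model; the findings list is what a policy engine might log or use to coach the user.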

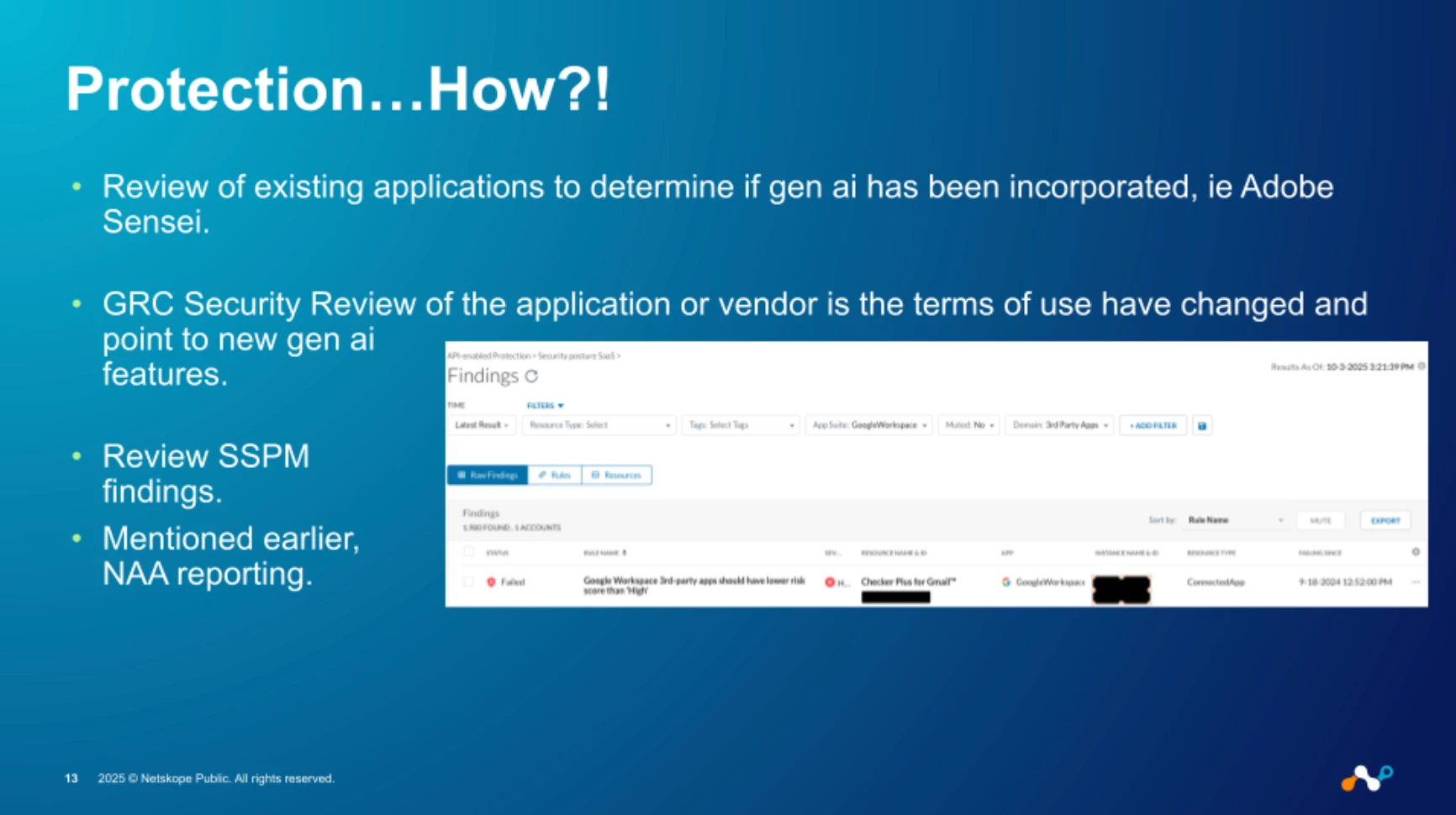

The How: Proactive Protection and Real-Time Controls

With a clear understanding of the what, who, when, and where, we can finally answer the most important question: How do we protect our organization? This is where we translate intelligence into active defense. The first step is to review our existing security posture, from SaaS Security Posture Management (SSPM) findings to governance, risk, and compliance (GRC) reviews of vendor terms, to determine where Gen AI has been incorporated into applications we already use.

Armed with this comprehensive insight, we can then build adaptive, real-time policies. We can start by controlling access to applications based on their risk level or Cloud Confidence Level (CCL) score, initially blocking applications rated unknown or poor while allowing specific, sanctioned activities. As we gain confidence and understanding, we can selectively expand the set of permitted activities, for example allowing posts and previews but blocking uploads and downloads. This approach allows us to enable our users to innovate safely, coaching them in real time and protecting our most sensitive data without hindering progress.
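As a rough illustration of this layered approach, the Python sketch below evaluates a request first by the application's rating and then by the activity being attempted. The rating labels other than "unknown" and "poor", the activity names, and the action strings are assumptions made for the example.

```python
# Ratings that are blocked outright at the start of a rollout.
BLOCKED_RATINGS = {"unknown", "poor"}

# Activities currently permitted; this set is widened as confidence grows.
ALLOWED_ACTIVITIES = {"post", "preview"}


def evaluate(app_rating: str, activity: str) -> str:
    """Decide what happens to one activity against one application."""
    if app_rating in BLOCKED_RATINGS:
        return "block"
    if activity in ALLOWED_ACTIVITIES:
        return "allow"
    # Not yet permitted: stop the action and explain why to the user.
    return "block_and_coach"


print(evaluate("poor", "preview"))  # block
print(evaluate("high", "post"))     # allow
print(evaluate("high", "upload"))   # block_and_coach
```

Expanding what users may do then becomes a matter of adding an activity to the allowed set, rather than rewriting the policy.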

Conclusion: Enabling Innovation with Confidence

Ensuring the safe use of Generative AI is not about blocking a new category of technology. It's about embracing it with a comprehensive security strategy. By following a logical framework (discovering the what, assessing the who, understanding the when and where, and implementing the how), we transform a potential source of risk into a powerful business enabler. This holistic approach of visibility, contextual analysis, and adaptive, real-time controls allows us to protect our most sensitive data while empowering our organization to innovate with confidence and security.

References

1. https://en.wikipedia.org/wiki/ELIZA

2. https://towardsdatascience.com/text-generation-with-markov-chains-an-introduction-to-using-markovify-742e6680dc33/

3. https://iopscience.iop.org/article/10.1088/2632-2153/ad1f77

4. https://liacademy.co.uk/the-story-of-eliza-the-ai-that-fooled-the-world/

5. https://www.canva.com/magic-write/

6. https://kantrowitz.medium.com/how-spotify-will-handle-and-harness-generative-ai-ccd9ec89043d

7. https://blog.cloudflare.com/response-to-salesloft-drift-incident/

8. https://www.netskope.com/press-releases/netskope-expands-ai-powered-platform-capabilities-including-new-copilot-for-optimizing-universal-ztna

Past Inside Netskope covering Gen AI

*Parts of this article were generated with the assistance of Gemini Pro.