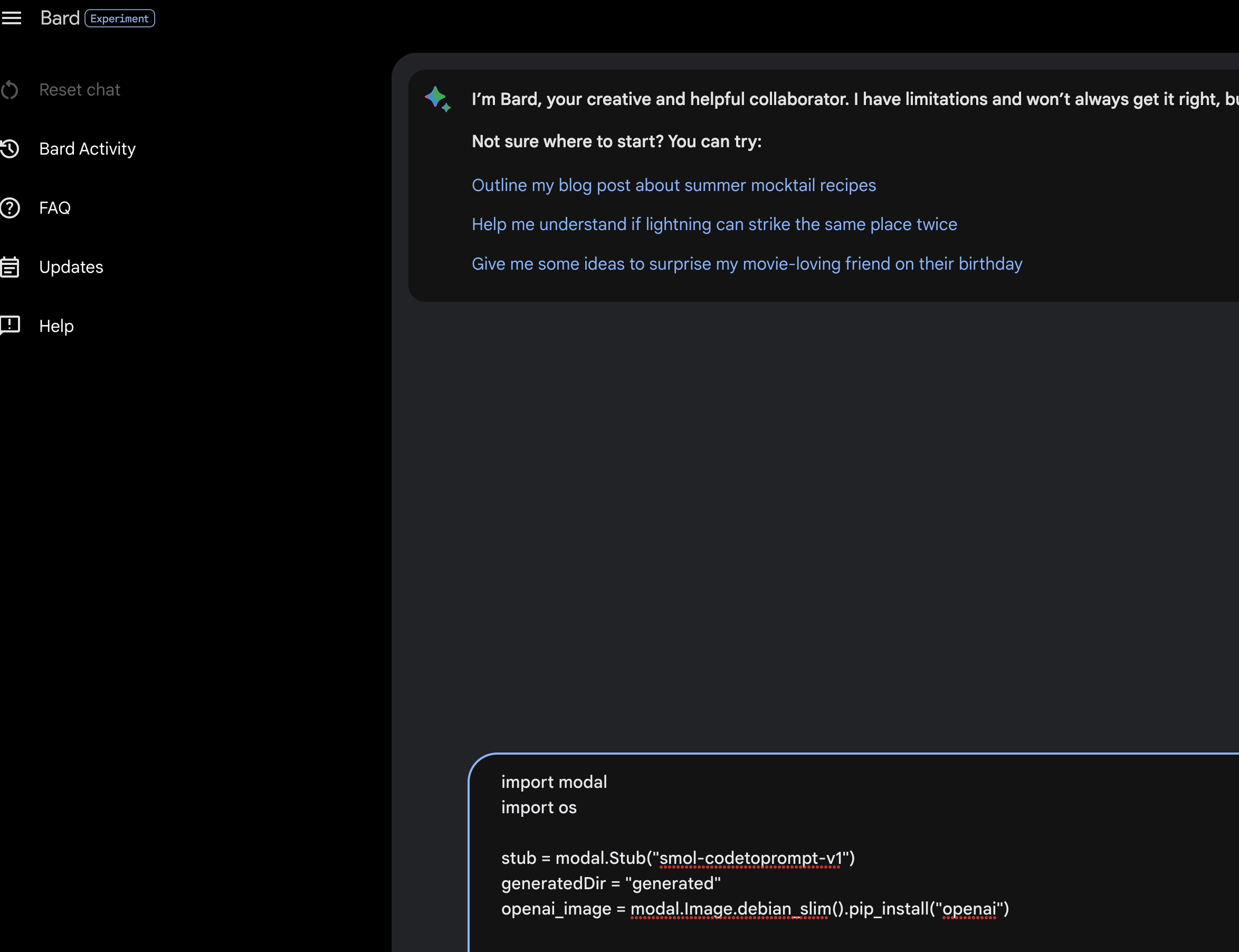

As the AI wars heat up, Google is stepping up its game against OpenAI with the release of Bard.

Google originally (early 2023) made it difficult for the average user to play around with Bard, gating access behind an invitation-based system with waitlists. That was earlier this year, when ChatGPT rocketed into the mainstream consciousness. As it stands today, most, if not all, Google account holders can access, play around with, I mean, leverage Google Bard.

What are the risks for enterprises? The reality is that they are no different from the risks of using any unsanctioned app. Data exfiltration capabilities, privacy issues, et al. make Google Bard a veritable minefield for every security organization.

Google explicitly advertises Bard as a way to make life simpler for software devs. This is the biggest risk I see for most enterprises when it comes to Bard and similar platforms: the risk of devs copy/pasting sensitive code to debug or understand it is huge. Hence the call to action.

OK, I get it, what now, you say?

Using Netskope, you can either block access to Bard outright (a tough pill to swallow for most users) or give users some leeway and block only sensitive movement of data.

- Outright block: This is pretty straightforward. Create a URL list, then a custom category, and use that category in your real-time protection policy to implement your block action.

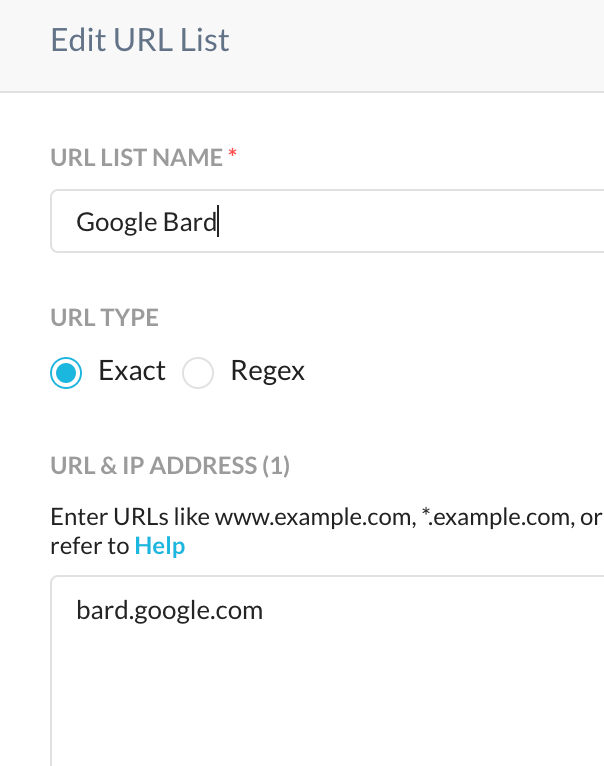

A URL List like the following should suffice. For help with URL lists, refer to the following page in our documentation portal.

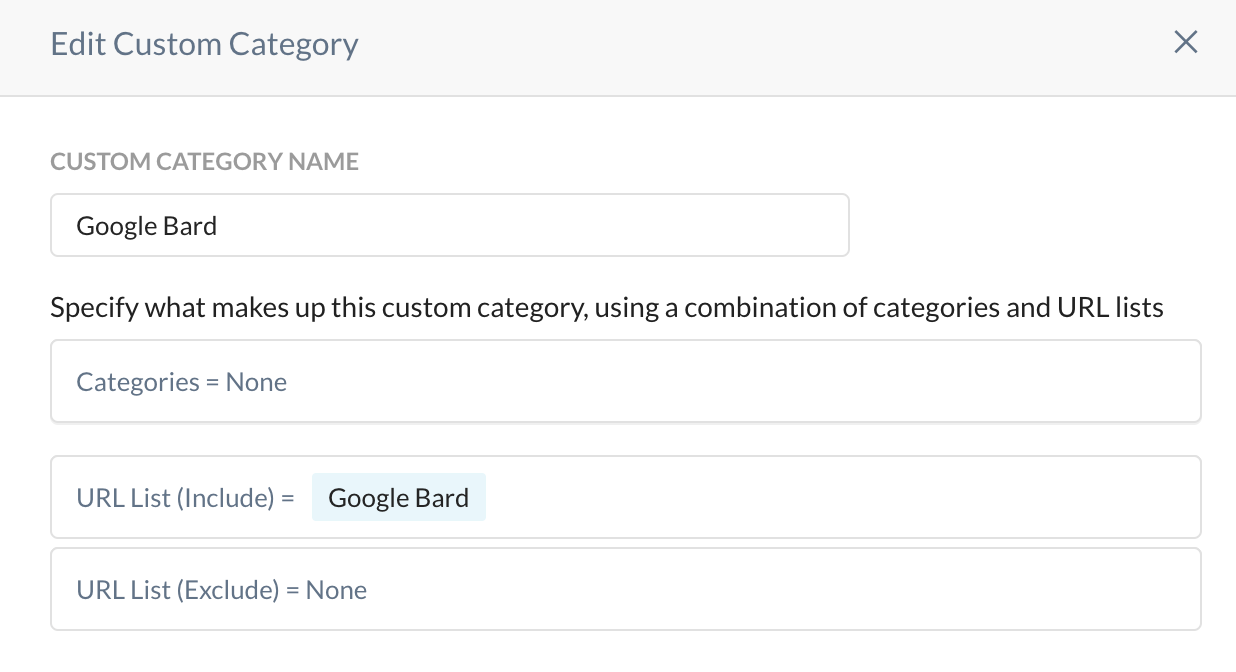

Next, create your custom category containing the URL list you've just created.

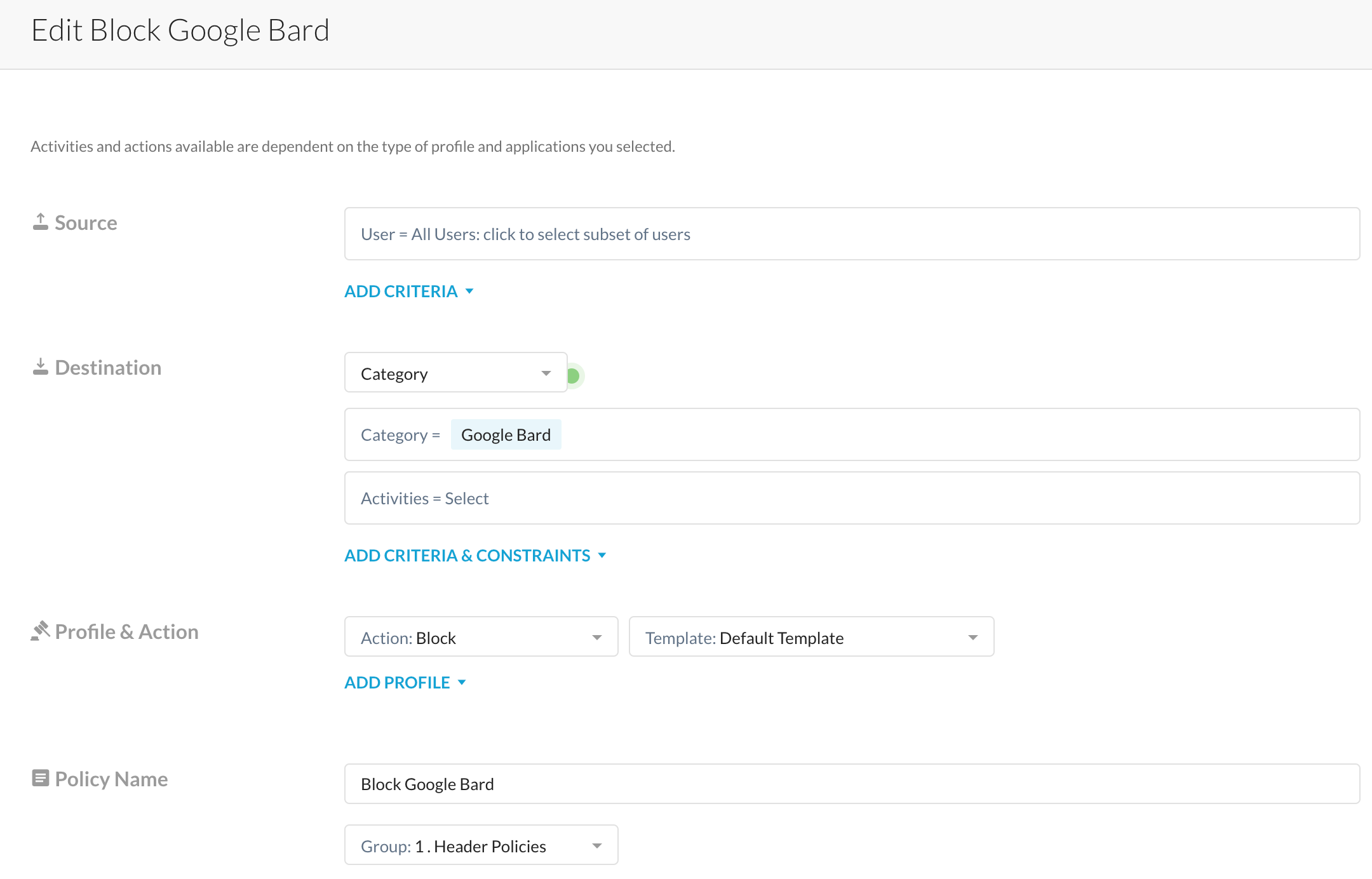

Place this created category in a real-time protection policy and you should be done.

Note that I don't really care about 'activities' here. If a user navigates to bard.google.com, they get a block message, no questions asked.

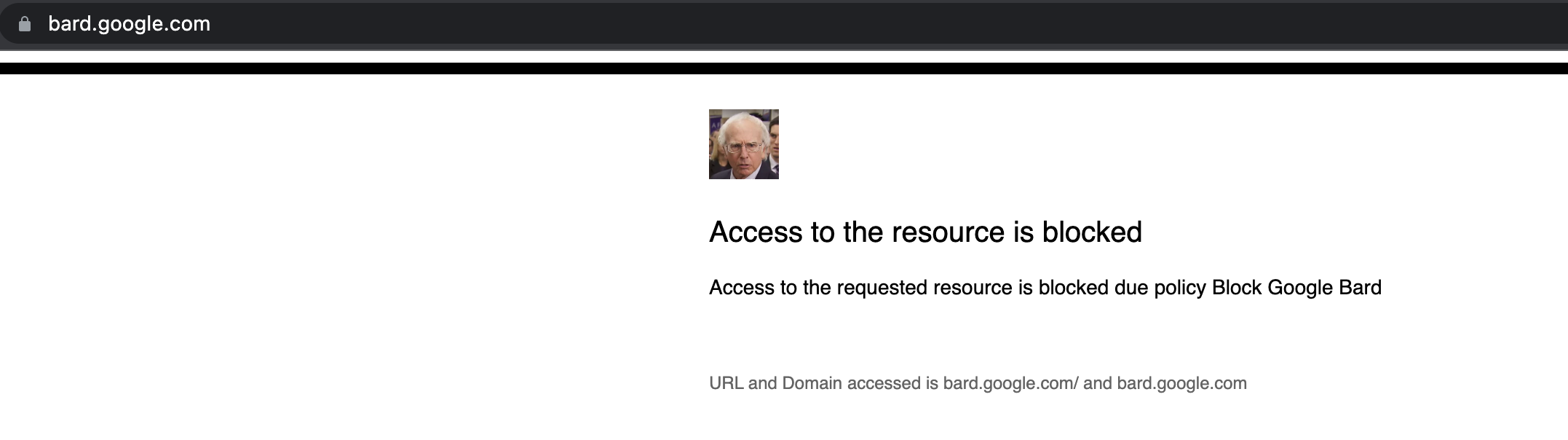

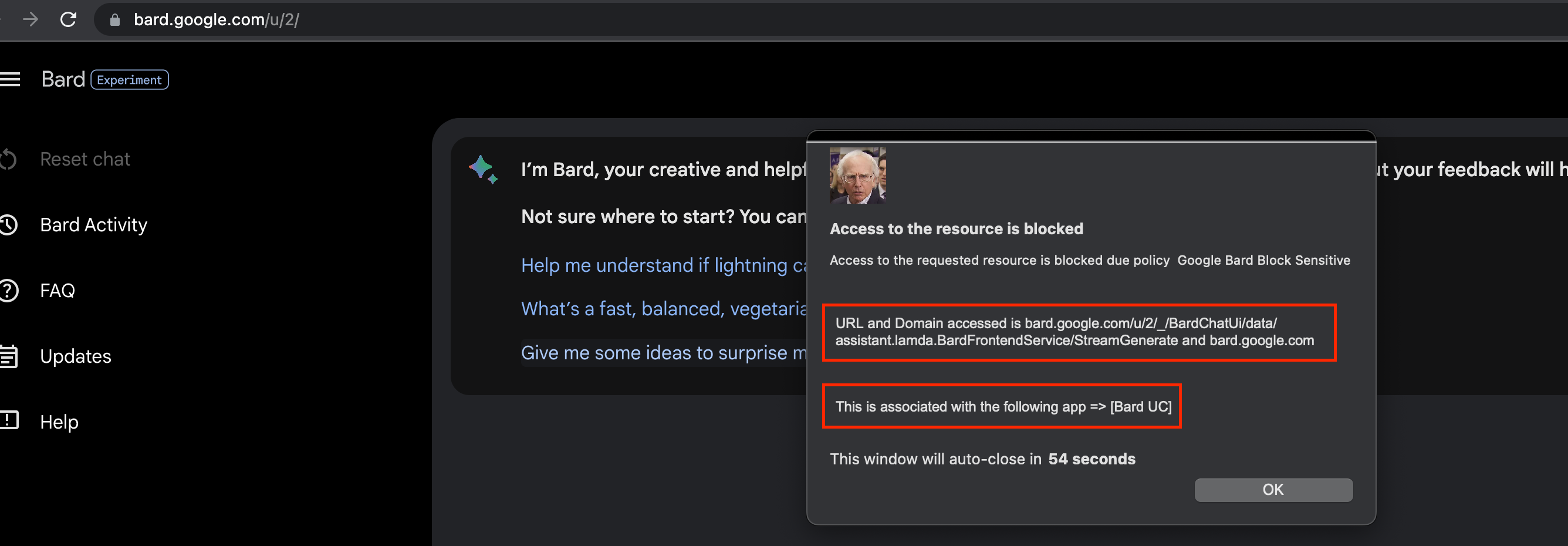

As you can see from the block message above, if a user hits the Bard URL by any means (a direct hit, a search engine result, or even from within Google's app catalog), they get a block notification.
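Why does the entry point not matter? A block like this is keyed on the destination, not on how the user got there. The sketch below illustrates that idea under stated assumptions: a hypothetical URL list whose single entry is bard.google.com, and a matching rule (exact host or any subdomain) that I've chosen for illustration. It is not Netskope's matching engine. The referrer is accepted but ignored, which is why a direct hit, a search-engine click and an app-launcher click all end up blocked.

```python
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical URL list mirroring the single bard.google.com entry described above.
BLOCKED_HOSTS = {"bard.google.com"}


def is_blocked(url: str, referrer: Optional[str] = None) -> bool:
    """Return True if the destination host is on the block list.

    The referrer is deliberately ignored: only the destination host is
    evaluated, however the user arrived there.
    """
    host = (urlsplit(url).hostname or "").lower()
    # Assumed rule: match the exact host or any subdomain of a listed host.
    return any(host == h or host.endswith("." + h) for h in BLOCKED_HOSTS)


print(is_blocked("https://bard.google.com/chat"))  # True
print(is_blocked("https://bard.google.com/", referrer="https://www.google.com/search?q=bard"))  # True
print(is_blocked("https://www.google.com/search?q=bard"))  # False
```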

- Sensitive content (DLP) + content-size-based blocks: The intention with this exercise is to allow users to 'chat' with Bard but prevent certain sensitive content from moving to Bard. This way, the ordinary user may continue to use Bard as a chatbot. By the way, I am not too concerned with that use case, as a user could otherwise rely on a search engine, and Bard is just an extension of the usual Google Search workflows.

Anyway, back to my original intention:

>> With this use case, I'd like to block sensitive content (source code, to be precise) from being shared on Bard, especially large blocks of code. To me, that is a risk to my enterprise: the exfiltration or movement of confidential, proprietary content outside my organization.

How do I do it? Using the Netskope Universal Connector.

For ChatGPT, my colleagues originally described a similar methodology for identifying communication with ChatGPT in Netskope, and Netskope subsequently released a formal "out of the box" connector to detect user activity within ChatGPT.

For Google Bard, we can detect activities (form posts of data) on Bard using a custom app definition that takes a couple of minutes to set up, with formal "out of the box" support coming very soon.

Step 1

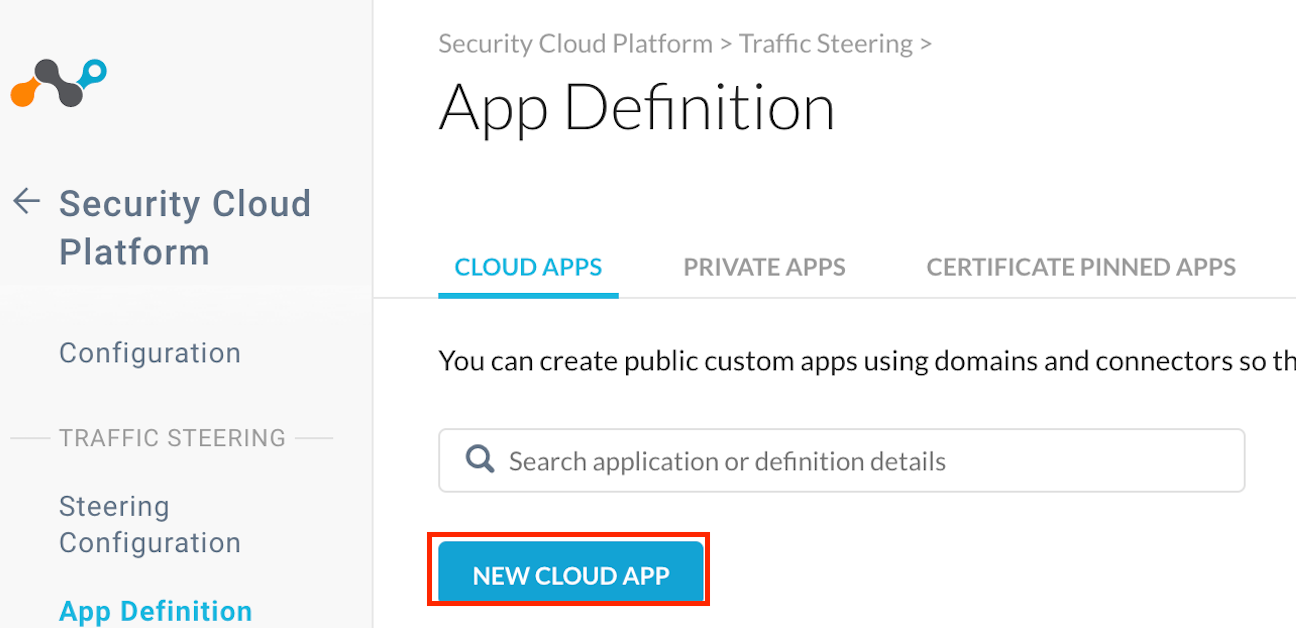

Navigate to Settings > Security Cloud Platform > Traffic Steering > App Definition and define your custom cloud app.

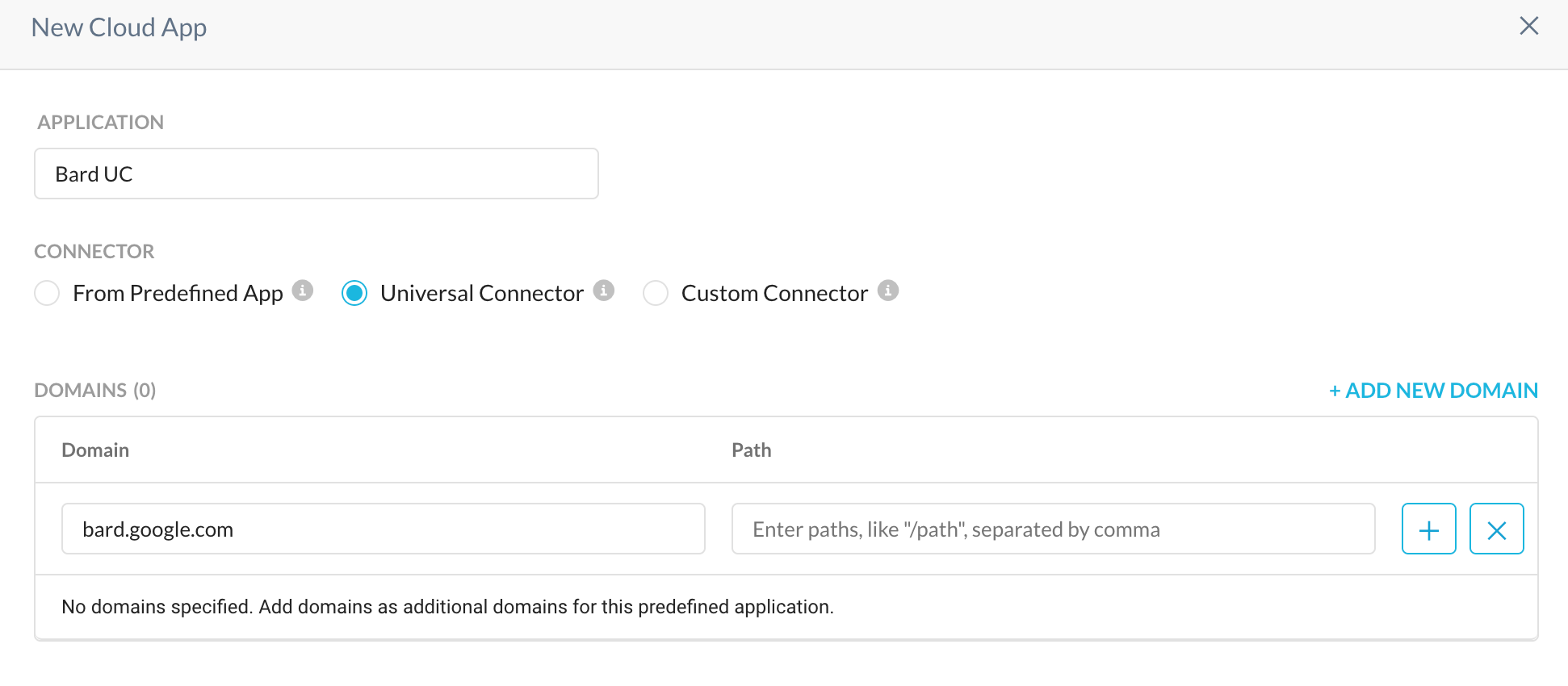

Whilst defining your cloud app, choose the Universal Connector and specify bard.google.com as the domain.

There's no need to add a path for now. Once you've typed in the domain, select the + icon and save the definition.

Once complete, you should get an update notice on your Netskope client; select Update to update your client.

Step 2

Use this app in your real-time protection policy, with DLP and any other inspections in place.

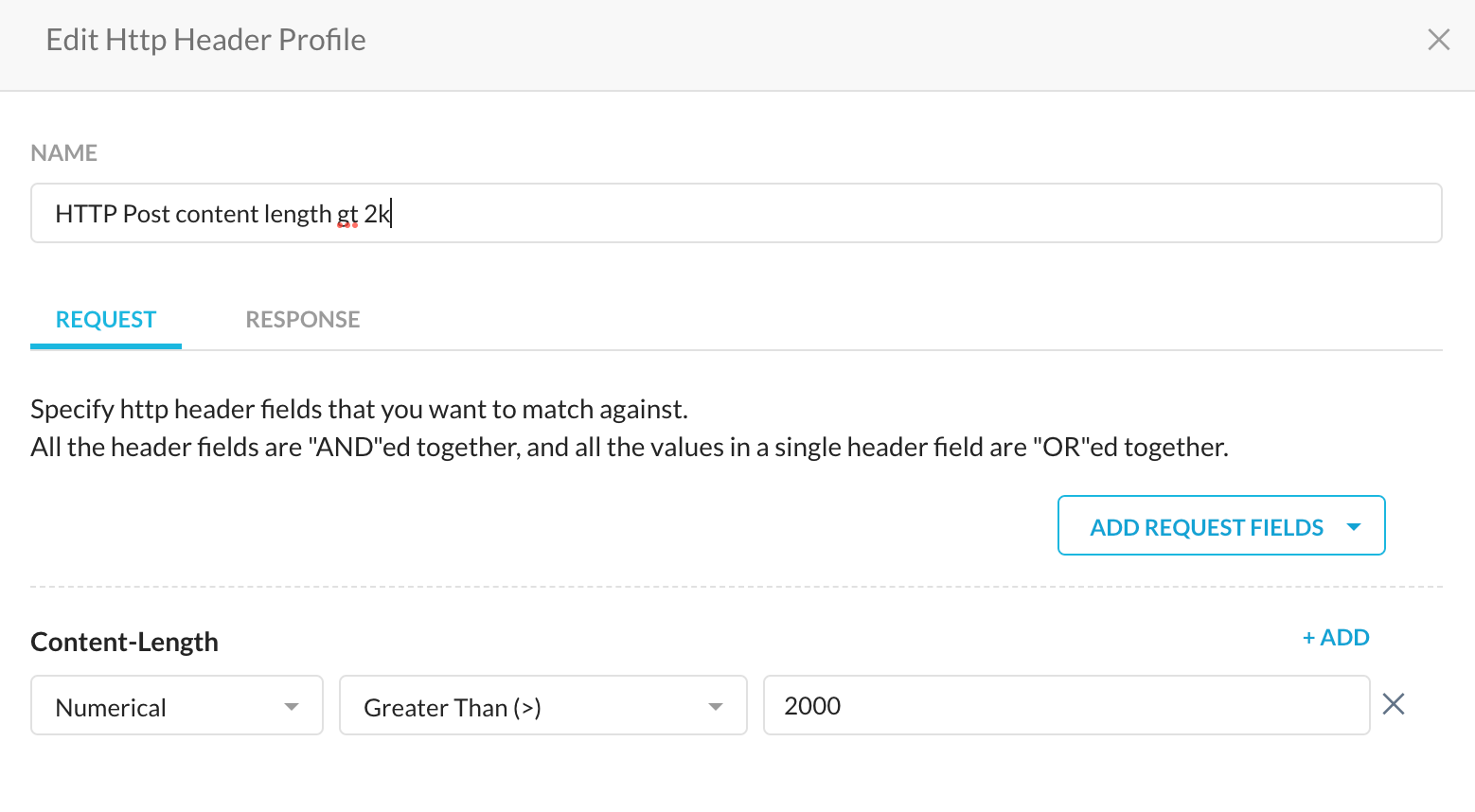

In my case, I have an HTTP Header Profile that looks at the Content-Length HTTP header, i.e. the size of the request body. This way, I'm able to allow 'small' transactions with Bard but block large movements of data.

I have selected an arbitrary length of 2000 bytes.
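The threshold check is simple arithmetic on the request body. The sketch below assumes that the profile compares the Content-Length value (the body size in bytes) against the 2000-byte limit and treats a larger value as a match. The helper names are hypothetical, not Netskope settings. Content-Length counts encoded bytes, not characters, so non-ASCII text is larger than its character count suggests. In practice, the form post Bard sends also carries encoding and other fields, so the real value will differ from the size of the pasted text alone.

```python
from typing import Mapping

CONTENT_LENGTH_LIMIT = 2000  # bytes; the arbitrary threshold chosen above


def content_length(body: str, encoding: str = "utf-8") -> int:
    """Size of a request body in bytes, as a client would report it in Content-Length."""
    return len(body.encode(encoding))


def exceeds_limit(headers: Mapping[str, str], limit: int = CONTENT_LENGTH_LIMIT) -> bool:
    """Return True when the Content-Length header is larger than `limit`.

    HTTP header names are case-insensitive, so compare them lowercased.
    A missing or malformed header counts as "no match" in this sketch.
    """
    for name, value in headers.items():
        if name.lower() == "content-length":
            try:
                return int(value) > limit
            except ValueError:
                return False
    return False


snippet = "def add(a, b):\n    return a + b\n" * 100
print(content_length(snippet))                                  # 3200
print(exceeds_limit({"Content-Length": str(content_length(snippet))}))  # True
print(exceeds_limit({"Content-Length": "150"}))                 # False
```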

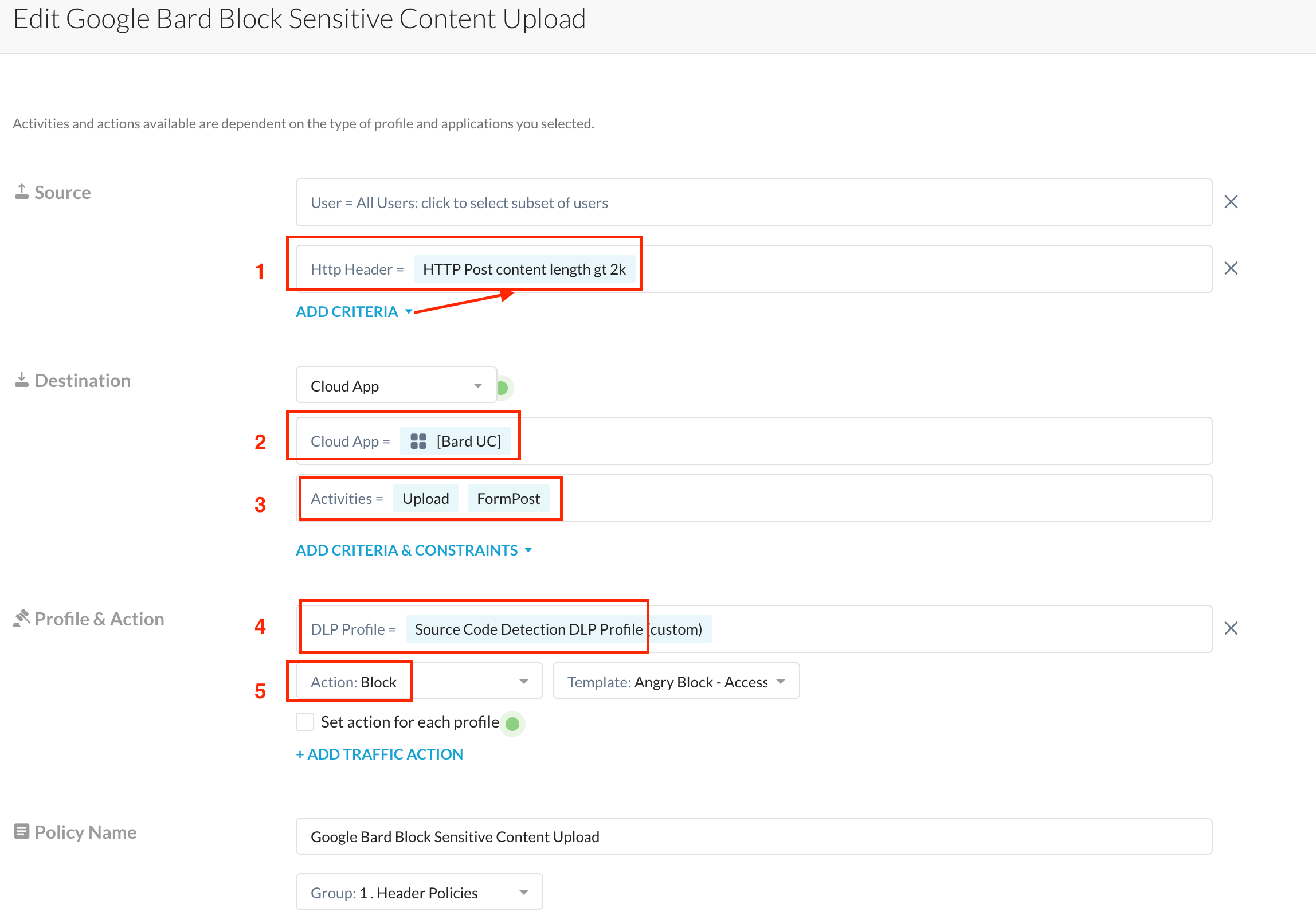

My real-time protection policy looks like the following.

I select the HTTP Header Profile (1) described above, select my destination as Bard UC (2), which is the name of my "app definition", and select activities (3). The available options depend on what the Universal Connector deciphers; in this case, it gave me the option to select Upload, Download and FormPost. Having made my choices, I move on to select the DLP profile (4) to be used in the data inspection. I chose a custom profile (source code classifier) from our range of available ML-based classifiers, which is described here.

I then chose an action (5): block if a detection is made.

The logic here is that a block is implemented only when both my HTTP Header Profile and my DLP profile match. In other words, if I post a large amount of code, I get blocked; if not, using Bard is OK.
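The AND logic of the policy can be sketched as follows. I can't reproduce Netskope's ML source-code classifier here, so `looks_like_source_code` is a deliberately crude, hypothetical stand-in: a keyword-and-symbol heuristic over lines. Only the structure reflects the policy described above: block when both the size check and the content check match, otherwise allow. All names are illustrative.

```python
import re
from typing import Mapping

CONTENT_LENGTH_LIMIT = 2000  # bytes, matching the HTTP Header Profile threshold

# Hypothetical, crude stand-in for an ML source-code classifier:
# count lines that look like code (keywords at line start, braces/semicolons at line end).
_CODE_LINE_PATTERNS = [
    re.compile(r"^\s*(def|class|import|from|return|for|while|if|elif|else)\b"),
    re.compile(r"[{};]\s*$"),
    re.compile(r"^\s*#include\b"),
]


def looks_like_source_code(text: str, min_hits: int = 3) -> bool:
    """Return True if at least `min_hits` lines match a code-like pattern."""
    hits = sum(
        1
        for line in text.splitlines()
        if any(p.search(line) for p in _CODE_LINE_PATTERNS)
    )
    return hits >= min_hits


def header_profile_matches(headers: Mapping[str, str], limit: int = CONTENT_LENGTH_LIMIT) -> bool:
    """Return True when Content-Length (looked up case-insensitively) exceeds `limit`."""
    for name, value in headers.items():
        if name.lower() == "content-length":
            return value.strip().isdigit() and int(value) > limit
    return False


def policy_action(headers: Mapping[str, str], body: str) -> str:
    """Block only when BOTH the size check and the content (DLP) check match."""
    if header_profile_matches(headers) and looks_like_source_code(body):
        return "block"
    return "allow"


code = "def add(a, b):\n    return a + b\n" * 100   # 3200 bytes of code
prose = "Please review the attached notes.\n" * 100  # 3400 bytes, not code
print(policy_action({"Content-Length": str(len(code.encode()))}, code))    # block
print(policy_action({"Content-Length": "150"}, "def f():\n    return 1\n"))  # allow (small)
print(policy_action({"Content-Length": str(len(prose.encode()))}, prose))  # allow (not code)
```

Either check alone would be noisier: size alone would block long, harmless prompts, and content alone would block a one-line snippet. Requiring both keeps everyday chatting unaffected.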

Test results

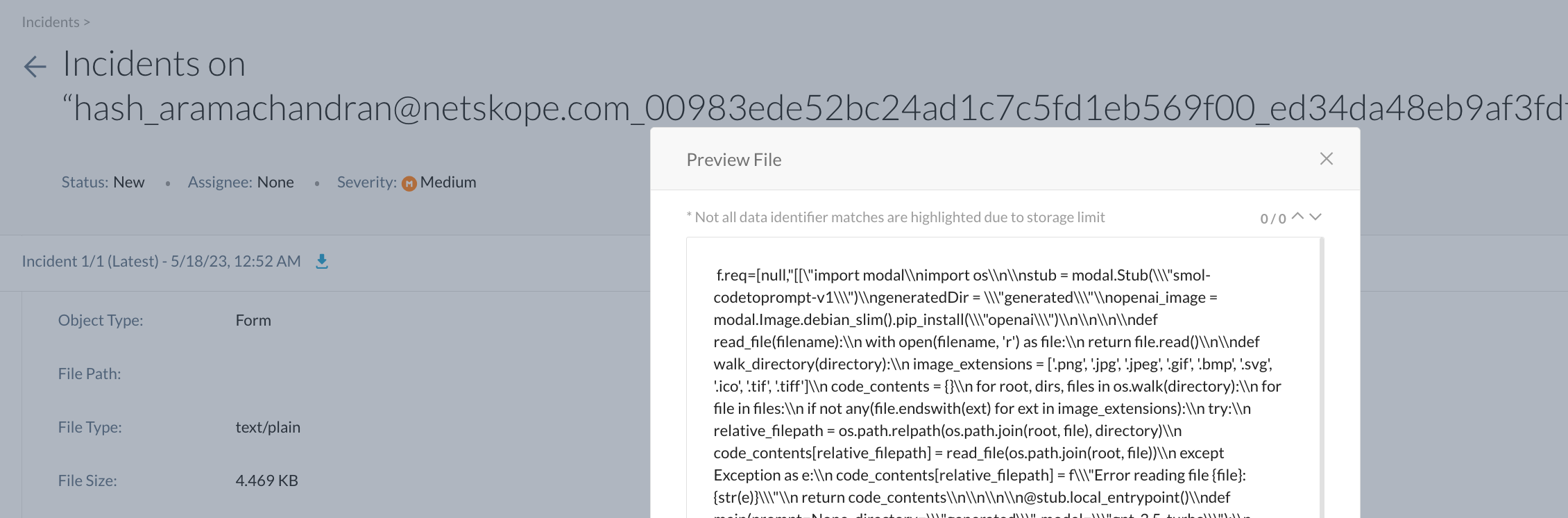

I attempt to paste an 83-line Python program into Bard, as I want to test it for bugs.

Alas, Netskope blocked me 🙂

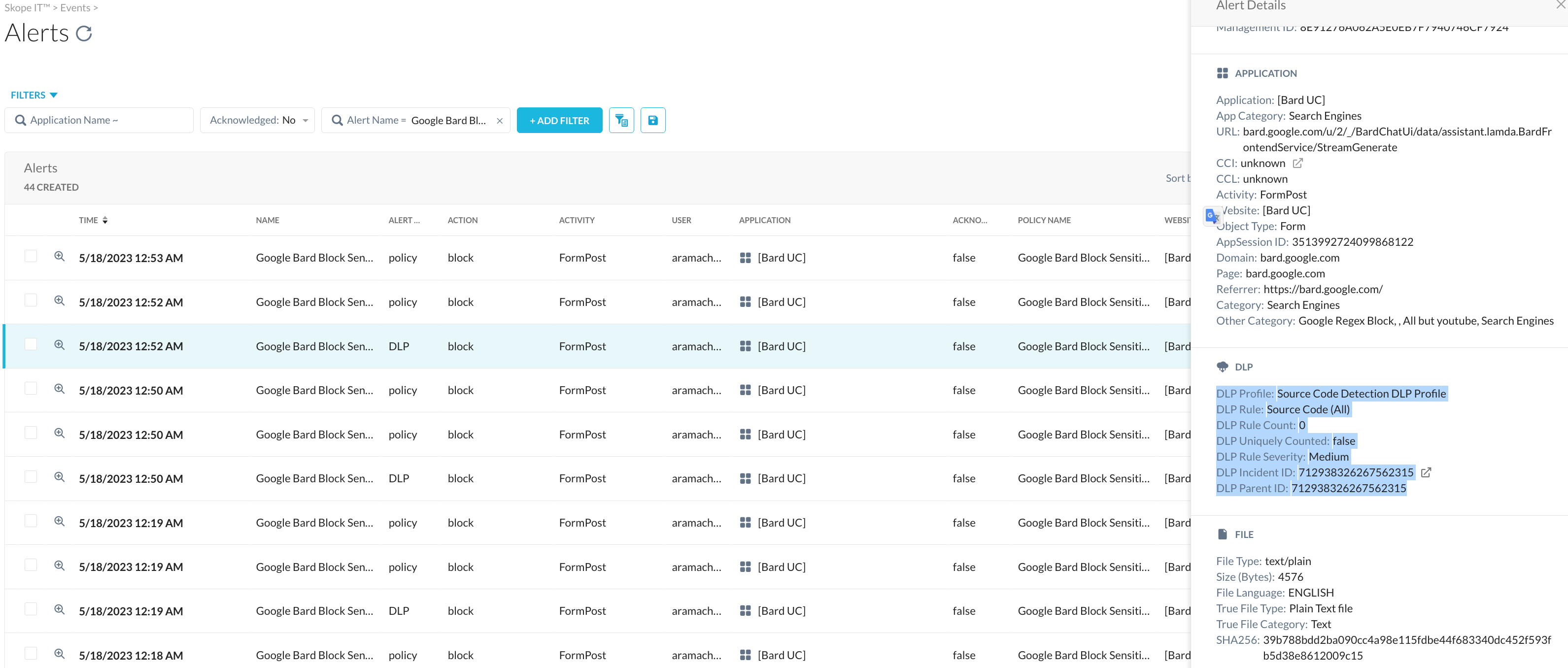

The details of the block (the reasons, to be precise) can be found on the Alerts page, which references the DLP rule matched for this detection: source code.

I can drill down into the Incidents page to view the forensic data, i.e. to inspect the code shared on Google Bard.

With that, we're done. With Netskope, you can choose to block Google Bard outright or take a targeted approach to data protection. I prefer the latter; what about you?

Ajay Ramachandran